DCIM still matters – but it has to deliver and pay for itself this time.

Contents:

- Executive Summary

- Introduction

- So why has DCIM failed to deliver to date?

- Need for a more credible DCIM approach

- Why effective data center optimization demands a next generation DCIM capability

- Bringing new levels of data granularity to DCIM

- Leveraging innovative technologies to unlock the power of DCIM 2.0

- Five key steps to AI and machine learning-powered DCIM

- EkkoSoft Critical – AI and machine learning-powered data center optimization

- DCIM optimization on a continuous basis with Cooling Advisor

- 3DCIM from EkkoSense – Data Center Infrastructure Management done right

- EkkoSense – your partner for DCIM 2.0

Summary

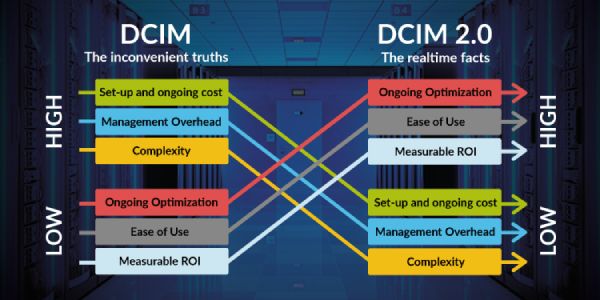

Since the Data Center Infrastructure Management or DCIM proposition was introduced by Gartner over a decade ago, the concept has struggled to mature. Worse than that, DCIM as a term has become increasingly tarnished following numerous disappointing deployment results.

Generally, DCIM has failed to deliver on the promises made – indeed in many cases it has turned out to be little more than a stronger Building Management System or an over-priced Asset Register. This has left many data center operations teams – and their CIOs and CFOs – quite rightly asking questions such as ‘what has DCIM ever done for us?’ and ‘where’s the return on our DCIM investment?’. Unfortunately, too many organizations with major DCIM projects in play find these questions just too difficult to answer.

DCIM has turned out to be great as a concept but less good when it comes to practical deployment in today’s busy data center environments. While DCIM and traditional monitoring solutions can do a good job of tracking assets within a data center, they only provide ‘moment in time’ data that is often inaccurate against real-time operating conditions. These legacy DCIM solutions do provide alerts when breaches occur, but they don’t support ongoing optimization. DCIM also typically demands significant resources to manage, largely because any analysis and interpretation has to be carried out by operators and can take months to action.

However, perhaps the key downside to DCIM and traditional monitoring deployments is that there is no measurable ROI for data center operations teams. Couple this with complex and lengthy installs and significant upfront perpetual license costs, and it’s hardly surprising that DCIM still arouses so much scepticism across the data center sector.

In this EkkoSense White Paper we revisit the original DCIM concept and definition, refocus on why Gartner felt it was so important at the time, and consider why – more than ten years further on – so many people feel that DCIM has failed to deliver. We’ll look at some of the key reasons for this disappointment, identify where DCIM started to drift, and examine what steps operations teams need to take to get DCIM back on track.

At EkkoSense we believe strongly that DCIM still matters, especially now at a time when data center operations are under more pressure than ever before. Across the data center community, it’s clear that the requirement for a proven DCIM offering – one that actually works – has never been stronger.

But if DCIM is to truly deliver on its original hype and provide data center teams with the full-suite functionality they are looking for, there really has to be an approach that helps to address both IT and M&E functionality. Any effective next generation DCIM will also need to be simple and fast to install, easy to operate, and provide operations with valuable analysis and insights into how to manage their facilities.

Such a solution should also have transparent pricing structures, be easy to map against the changing demands of today’s data center estates, and also deliver a clearly demonstrable, short-term ROI. And the good news is that this kind of innovative DCIM v2.0-style solution is now available.

Introduction

So what exactly is DCIM?

When analyst firm Gartner originally defined its Data Center Infrastructure Management (DCIM) concept, it described DCIM as a collection of tools that ‘monitor, measure, manage and/or control the data center utilization and energy consumption of all IT-related equipment and facility infrastructure components’.

While Gartner’s original DCIM definition focused on infrastructure management, in reality most vendor offerings focused instead on infrastructure monitoring with very little management. Monitoring is clearly an essential component of any effective DCIM solution, but it should only be the start of any successful infrastructure management process. Indeed, best practice DCIM should go way beyond merely highlighting a problem to actually offering solutions to issues along with ongoing management advice.

In its more recent definitions, Gartner goes on to outline some further DCIM characteristics, suggesting that tools should be designed for data center use and focused on optimizing data center power, cooling and physical space. And while Gartner advises that DCIM solutions don’t have to be sensor-based, it indicates that they do have to be designed to accommodate real-time power and temperature/environmental monitoring. The importance of resource management support is also stressed, particularly in terms of extending the capabilities of traditional IT asset management to incorporate asset locations and their inter-relationships.

What did data center teams expect that DCIM should offer?

Over ten years ago, Computer Weekly wrote about how DCIM was playing a growing role in ‘data centre managers’ tooling strategies’. It then set out ten attributes that it felt any serious DCIM system should offer:

- Data center asset discovery – including servers, storage and networking equipment, and also key facility systems such as power distribution units, UPS systems and chillers

- Advanced asset information – featuring data center equipment databases with ‘real-world’ energy requirements

- Granular energy monitoring – detailing how DCIM tools must be able to monitor and report on ‘real-time energy draws’ so that operations teams could identify spikes that might lead to potentially bigger problems

- Comprehensive reporting – recommending that DCIM tool dashboards should be able to support the information needs of both Facilities Management and IT/data center employees, with the ability to drill down through views to identify the root cause of problems

- Computational Fluid Dynamics (CFD) – applying CFD to analyze airflows and indicate where hotspots were likely to occur was seen as a vital component of ensuring that cooling could be deployed efficiently

- 2D and 3D data center schematics – graphical displays should help data center managers to visualize hotspots and establish whether they can be resolved by re-routing cooling. These should be active, with a DCIM tool that operates against live equipment data

- Environmental sensor manager – DCIM tools ‘must integrate with environmental sensors’ in order to alert data center teams when temperatures are exceeding allowable limits. They can act to either increase cooling or highlight any relevant underlying issues with equipment

- What If? scenario planning – to support proposed changes, it was recommended that DCIM systems should be able to not only show the likely result of any changes on power distribution and cooling, but also be able to advise on potential alternative approaches

- Structured cabling management – showing where cables should be installed, and how they can be placed to avoid airflow impedance

- Event management – where DCIM is able to initiate actions based on specific environmental conditions that it finds – whether that’s links to systems management software, security systems or trouble-ticketing applications
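To make the ‘granular energy monitoring’ attribute more concrete, the short Python sketch below flags any power reading that exceeds the average of the preceding readings by a configurable margin, which is one simple way of spotting the kind of spikes described above. It is an illustration only: the function name, the four-sample window and the 25% threshold are hypothetical choices, not part of Computer Weekly’s list or of any DCIM product.

```python
from statistics import mean

def find_spikes(readings_kw, window=4, factor=1.25):
    """Flag readings that exceed the mean of the previous `window` readings
    by more than `factor` (1.25 means 25% above that baseline).

    Returns a list of (sample_index, reading_kw, baseline_kw) tuples.
    """
    spikes = []
    for i in range(window, len(readings_kw)):
        # Trailing average of earlier samples; the current sample is excluded
        baseline = mean(readings_kw[i - window:i])
        if readings_kw[i] > baseline * factor:
            spikes.append((i, readings_kw[i], baseline))
    return spikes

if __name__ == "__main__":
    # Hypothetical per-minute power draw for a single PDU, in kW
    draws = [4.1, 4.0, 4.2, 4.1, 4.0, 5.6, 4.2, 4.1]
    for index, value, baseline in find_spikes(draws):
        print(f"Spike at sample {index}: {value} kW vs baseline {baseline:.2f} kW")
```

Because a spike feeds into the baselines that follow it, a production tool would more likely use a robust baseline (a median, say) and route the result into event management rather than simply printing it.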

Computer Weekly’s prescription for a comprehensive DCIM offering was just one of hundreds of commentaries helping to fuel the DCIM discussion over a decade ago. However, what it clearly indicates is the ambition and scope that many observers felt was achievable through an active commitment to DCIM. Ideas such as granular energy monitoring, 3D data center visualization, real-time analysis and environment sensor management were seen as vital components of a successful DCIM strategy right from its inception.

What was DCIM expected to achieve?

Data centers exist to reliably and securely host the multiple compute, storage and network resources that collectively provide the enterprise and digital services that we all depend on in our daily lives. In its 2016 Magic Quadrant for Data Center Infrastructure Management Tools, analyst firm Gartner suggested that all organizations with moderate-size data centers should consider investing in a DCIM tool – particularly if they had a cost-optimization initiative or were planning any significant data center changes.

Designed to surface critical information about data center infrastructure availability, airflow, temperature, humidity and power consumption, DCIM was seen as a way of preventing potential equipment problems and maximizing reliability. In collecting the information necessary to help operations teams manage their data center infrastructure more efficiently, DCIM tools were designed to help enable the better management of assets, changes and data center capacity.

Originally conceived to help data center operations teams prevent equipment problems and maximize reliability, traditional DCIM solutions quickly evolved to become highly complex – both in terms of use and maintenance. Although DCIM was designed to help operations teams simplify management and better manage their assets, the addition of more and more capabilities quickly turned it into a resource-hungry distraction for many data centers.

While operations teams may have had access to key DCIM capabilities such as asset management, capacity management, change management, energy management and a range of monitoring options, many found it hard to translate these capabilities into benefits that could result in a tangible DCIM return on investment – specifically around resource overhead and a short-term ROI. In hindsight, it seems clear that many first generation DCIM solutions not only over-promised but also under-delivered.

So was DCIM over-hyped?

Since the original Data Center Infrastructure Management proposition was first conceived, it’s certainly the case that DCIM has struggled to mature. And for those organizations frustrated by the lack of success of their early DCIM deployments, there’s often a very real reluctance to get involved again.

In many ways the story of DCIM’s first ten years is a classic example of Amara’s Law – namely that “we tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run”. As is frequently the case with emerging technologies, the delta between initial market hype and its immediate market impact can be significant. However, as these technologies evolve, the inverse quickly comes into play and people tend to under-estimate their impact and market significance – especially in the long-term. AI is a great example of this. It’s a concept that’s over 60 years old, yet we have only recently started to appreciate its true impact.

Today, in addition to AI and machine learning, we’re seeing a range of key technologies – such as Cloud, Internet of Things, Edge computing and Virtual Reality – all coming together to help unlock new levels of productivity and value for DCIM.

This couldn’t have come at a better time for data center operators. Indeed, faced with the escalating IT workloads that accompany digital transformation initiatives, operations teams are simultaneously being asked to reduce their energy usage (initially to help address corporate Net Zero goals, but also now to help address the very real problem of escalating energy costs).

It’s going to be hard for data centers to meet both their workload and energy saving goals unless they can prioritize performance improvement across all their critical facilities. And that places a renewed focus on Data Center Infrastructure Management tools – but this time they have to deliver. Unfortunately, this is becoming much harder given the increasingly complex nature of today’s diverse IT estates. In-house deployments, remote unmanned Edge sites, and public and private Clouds all combine to make these varied estates especially difficult to manage, which accelerates the need for smart DCIM-style tools to support operations teams.

So why has DCIM failed to deliver to date?

Since the introduction of DCIM, it’s probably fair to say that it has struggled to mature and deliver against both its initial promise and evolving data center requirements. A number of factors have combined to make it much harder to deliver successful DCIM deployments; however, perhaps the most significant barrier to success has been an industry-wide failure to bring together the IT and M&E aspects of data center operations.

Bridging the gap between IT and M&E functions

A decade into Data Center Infrastructure Management, and it’s clear that initial DCIM hype around bringing the IT and M&E aspects of data center operations together never really happened. Designed simply to host compute, storage and network resources reliably and securely, data centers exist to support the many digital services that are now so integral to our daily lives. However, despite these services running on leading edge platforms, it’s still the traditional facilities management teams that manage and maintain the building and the critical supporting infrastructure within it.

And, while FM teams provide the secure space and support the delivery of the critical power and cooling resources on which installed IT equipment is totally dependent, they typically have no visibility of hosted IT equipment, its operation or management, or of plans to introduce or remove equipment as data center services evolve.

For most data centers, their compute, storage and networking equipment is generally looked after by IT teams that have little understanding of, or interest in, the critical infrastructure providing the power and cooling that enables their digital services to run effectively. Because of this, it’s common to see expensive power and cooling resources being used inefficiently. With electricity being the largest input cost to a data center beyond the initial construction, this trend can no longer be ignored – particularly given today’s dramatically rising energy costs. Excess energy usage not only gets in the way of corporate net zero initiatives, but also potentially places organizations at risk when critical resources suddenly become depleted or unavailable. That’s particularly the case in facilities such as edge deployments where staff aren’t available on site to resolve potential issues.

Such a disjointed approach to data center management still appears to be a common operational structure, with responsibility for data center infrastructure management being shared across IT, facilities and M&E functions – each often with very different business goals. That’s why many of the original DCIM toolset vendors that came from the IT side of the fence failed to address the very real M&E needs of data centre operators – particularly in terms of overall energy efficiency and capacity management.

One of the main drivers of DCIM reluctance across the industry has been the lack of tools that enable operations teams to manage both their IT equipment condition as well as their critical facilities infrastructure at the same time. If DCIM is ever to deliver on its original hype – and give customers the breadth of toolset capability they are looking for – then it’s essential that there’s a genuine bridging of the gulf between IT and M&E functions.

However, the time when it was believed that a single DCIM tool could do everything to address these issues has long gone. Operations teams will require specialist tools to manage specific elements of their IT and M&E activities. Given this, it’s likely that an effective DCIM approach will evolve to incorporate a suite of tools that collaborate and potentially share data to create a far more comprehensive management solution than would ever be possible using a single product.

That’s why there’s now a real opportunity for DCIM to begin living up to its original hype. Aligning IT, Facilities, and other business stakeholders will help operations teams to take their data centers to the next level. And that’s particularly important as data center infrastructure accommodates the latest distributed and hybrid architectures. Indeed, the functionality historically promised for DCIM becomes even more important if data centers are to successfully optimize the performance of these ever more complex distributed environments.

Why DCIM deployment has proved challenging

It’s clear that implementing successful DCIM deployments that meet the initial expectations set out by analyst firms such as Gartner has proved challenging. In addition to the significant challenges caused by poor integration between IT and M&E, DCIM has also struggled in a number of other key areas, including resourcing, usability, complexity, and return on investment.

The cost of DCIM deployments has often proved too high for all but the largest data center operators. Some legacy tools could take months to deploy due to the complexity of the software and integration challenges, while the constant requirement to keep DCIM systems current and accurate invariably required extra resources. Many data centers also found it hard to recruit the right people to carry out critical DCIM monitoring and benchmarking work, a fact exacerbated by the growing skills gap over recent years.

Initial DCIM product deployment may have been expensive, but many data center operations teams also found themselves allocating extra resources to manage their DCIM complexity. Despite this significant investment, lack of functionality and the need for further spending have still led some organizations to abandon their DCIM projects due to their unsustainable complexity and escalating costs. For some, a key driver behind this decision would have been frustration and disappointment caused by solutions that simply didn’t provide the functionality they were expecting.

DCIM tools should of course be much more than a smart GUI for an asset management system, or an added value Building Management System. However, it’s also important that they are accessible and easy for operations teams to use. Platforms have to be simple and straightforward to install, easy to operate, and move beyond basic monitoring to provide data center teams with valuable insights into how their facilities are performing. At any stage it should be possible to access intuitive live views to help them manage against key performance metrics, while also giving them the visibility needed to identify and resolve potential capacity constraints.

The stark reality for many operators is that they don’t have access to the tools that can help them to make smart data centre performance choices in real-time. And although legacy DCIM tools are useful at helping teams to manage their facilities, many have found them limited when it comes to the kind of deep data analysis needed to really optimise performance at the mechanical and electrical level.

Importantly, DCIM offerings also need to be quick to value so that operators can realise the benefits immediately without lengthy initial deployment phases and significant up-front costs. However, the combination of extra resourcing requirements and overly complex solutions has meant that most users of traditional DCIM solutions have not seen a tangible ROI on their DCIM investment.

Need for a more credible DCIM approach

Many companies spent huge sums on DCIM projects, and while some have been able to unlock value from their deployments, others felt that they had little choice but to abandon their projects midway. Perhaps a key reason for this is that DCIM was never really clearly defined, with its early hype around the promise of seamlessly marrying the IT and M&E aspects of a data center operation never materializing. Some of the bigger product vendors also significantly over-promised on their DCIM platform capabilities and usefulness, completely devaluing their DCIM proposition.

Market withdrawals

Over recent years, the DCIM market has undergone significant consolidation, with some vendors discontinuing their DCIM products and others leaving the market altogether – despite having previously invested millions of development dollars in their DCIM programmes. This clearly caused uncertainty for many data center customers, particularly as the challenges they were trying to solve with DCIM haven’t gone away.

And for those customers left with solutions provided by vendors that have now withdrawn from the market, there are decisions to make around when to come off specific platforms, whether to replace them on a like-for-like basis, or whether to take a more positive step and rethink their DCIM focus altogether. At EkkoSense we believe there’s a great opportunity for these customers to re-evaluate their priorities with regards to their DCIM implementation – not just to swap one DCIM tool for another, but to now start benefiting from the real M in DCIM – the ability to Manage and optimize their data center operations.

The underlying reasons for DCIM haven’t gone away

So, there are good reasons why DCIM hasn’t been more widely adopted. Despite this, the data center management challenges that customers were trying to solve haven’t gone away. At a time when data centre operations are under more pressure than ever before, the need for a more credible DCIM approach – one that is accessible, affordable and actually works – has never been stronger. The DCIM functionality historically promised is even more necessary to help operations teams to manage ever more complex distributed environments, so there is still very much a need for tools to fill this niche.

This presents an ideal opportunity for DCIM to transition from its traditional IT asset management led approach and, instead, begin working towards a much broader, M&E-based capability. With innovation across key areas such as machine learning, AI, remote monitoring, analytics and more accessible user interfaces, there’s a growing realization that it’s now possible to take DCIM through to the next level – looking beyond traditional monitoring and management to create true data center infrastructure management solutions that embrace both the IT and M&E spaces.

Three key factors driving an increased requirement for next generation DCIM capabilities

Problems experienced during the pandemic – particularly the challenge of getting people into data center sites – have reiterated the need for stronger remote monitoring and optimization capabilities. They also highlighted the requirement to support already stretched operations teams with more comprehensive management tools.

At the same time, organizations are busy developing their own net zero transition plans that detail specific greenhouse gas emissions targets. With data centers now firmly established as one of the highest consumers of corporate energy, it’s imperative that operations teams work to secure quantifiable energy savings. Unfortunately, it’s difficult for data centers to know exactly how much energy they’re using in the first place. Research conducted by EkkoSense suggests that very few teams really know how their rooms are performing from a cooling, capacity and power perspective. Closing that visibility gap will require access to advanced DCIM-style solutions if data centers are to begin making significant contributions to corporate carbon reduction activities.

Additionally, there’s currently increased pressure on data centers to address the issue of dramatically rising energy costs. Current hedged energy prices won’t last forever, and once those hedges expire they are likely to be outpaced by much higher market rates. For all but the very few data center teams that have already assigned capex for this financial year purely to mitigate rising energy costs, the only real alternative is to right-size the cooling that their data center infrastructure currently delivers. An effective DCIM approach will be essential here in helping to identify and realize potential energy savings.

Ultimately data center managers do not need what has been marketed as DCIM historically. Instead, they require a next generation ‘DCIM v2.0’ approach powered by smart management capabilities and a clearly demonstrable short-term ROI. However, any next generation DCIM solution will need to harness the power of machine learning and AI to ensure that operations teams aren’t only presented with issues as they occur. Far better to take advantage of software that can provide intelligent insights and recommended solutions before the issues even surface.

Why effective data center optimization demands a next generation DCIM capability

The most important thing a data center operator must do is meet the needs of a business and its customers. Availability and uptime must be 100% if services are to deliver the high quality experience that both customers and internal users demand. The second most important consideration is how efficiently the operator can deliver this availability and uptime – and by ‘efficiently’ we mean using as little energy as possible, as cost-effectively as possible.

And, given that data centers already consume around 1% of the electricity used worldwide – a number that’s on the increase – this has implications that extend far beyond the data center. Even the most conservative projections have data centers growing at 4.5% annually over the next five years, while some project a 20% annual growth. Our greater reliance on online services, continued digital transformation and the global spread of 5G connectivity are all driving this growth. The result is that we’re using data centers more and more every day, and it takes greater bandwidth to ensure we experience improved performance each time we upgrade.
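The gap between the two growth projections above becomes clearer once they are compounded. The Python calculation below simply applies (1 + r)^n to the two annual rates quoted, over the five-year horizon mentioned; it assumes steady compound growth every year, which real demand is unlikely to follow exactly, and the function name is hypothetical.

```python
def cumulative_growth(annual_rate, years):
    """Total growth multiplier after compounding annual_rate over the given years."""
    return (1 + annual_rate) ** years

if __name__ == "__main__":
    # The two five-year projections quoted above
    for rate in (0.045, 0.20):
        multiplier = cumulative_growth(rate, 5)
        print(f"{rate:.1%} a year for 5 years: x{multiplier:.2f}")
```

At 4.5% a year the multiplier is roughly 1.25 (about a quarter more demand after five years), while at 20% it is roughly 2.49, so the two projections imply very different capacity and energy planning assumptions.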

To support these workloads, around 35% of data center energy is taken up by cooling, and EkkoSense Research estimates that around 150 billion kilowatt hours per year are being wasted through inefficiencies of cooling and airflow management in data centers. For operators, this translates to wasting over $18 billion a year. These are big numbers, and they are simply not sustainable from either a cost or a carbon perspective.
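The two headline figures above also imply an average electricity price: dividing $18 billion by 150 billion kWh gives about $0.12/kWh, and since the cost is quoted as ‘over’ $18 billion, that is a floor rather than an exact rate. The Python sketch below reproduces that check and applies the quoted 35% cooling share to a site total. The 10 GWh facility and the function names are hypothetical, and real cooling shares vary widely from site to site.

```python
WASTED_KWH_PER_YEAR = 150e9       # EkkoSense Research estimate quoted above
WASTED_COST_USD_PER_YEAR = 18e9   # "over $18 billion a year", so a lower bound
COOLING_SHARE = 0.35              # share of data center energy used by cooling, as quoted above

def implied_price_per_kwh(cost_usd, energy_kwh):
    """Average price implied by a total cost and the energy it paid for."""
    return cost_usd / energy_kwh

def cooling_energy_kwh(facility_kwh, cooling_share=COOLING_SHARE):
    """Rough estimate of a facility's annual cooling energy from its total energy."""
    return facility_kwh * cooling_share

if __name__ == "__main__":
    price = implied_price_per_kwh(WASTED_COST_USD_PER_YEAR, WASTED_KWH_PER_YEAR)
    print(f"Implied average price: ${price:.2f}/kWh")
    # Hypothetical facility consuming 10 GWh a year
    print(f"Estimated cooling energy: {cooling_energy_kwh(10e6):,.0f} kWh/year")
```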

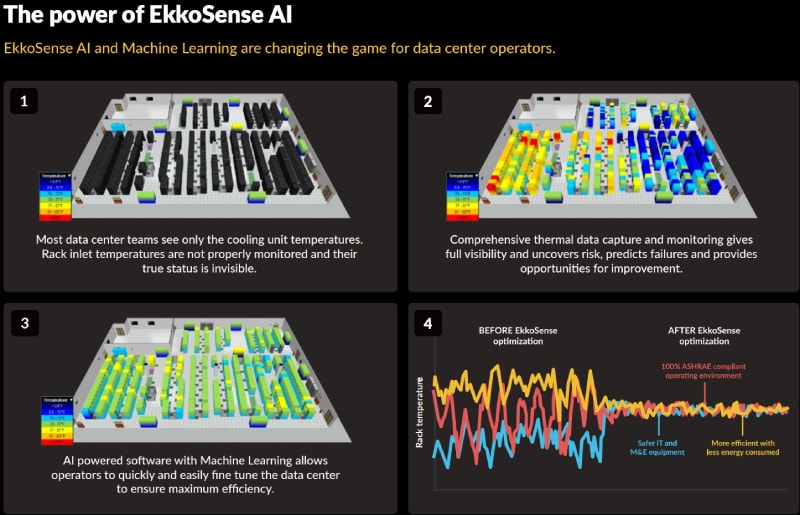

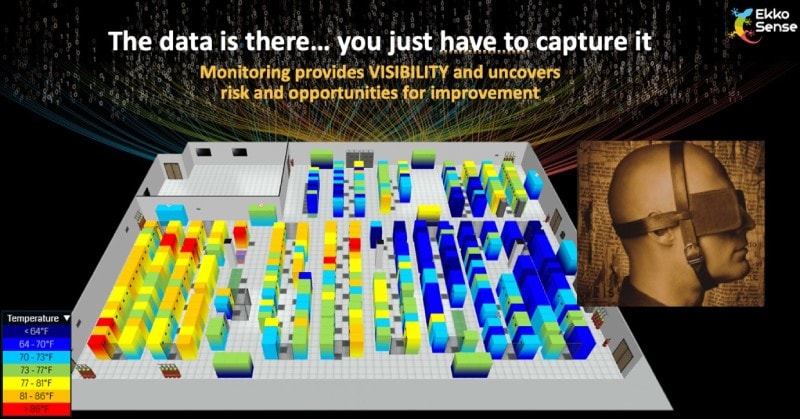

It’s difficult to unlock the kind of performance improvements that are needed to address these issues unless you know exactly what’s happening in your data center. EkkoSense data shows that less than 5% of all data center operators currently measure what’s happening at the rack level in their facilities. This means that when it comes to the actual IT cabinets, most operators are effectively blind to their data center’s thermal performance and power consumption. They know what temperature their cooling units are running at, but, as far as rack inlet thermal reporting is concerned, they’re almost entirely in the dark and hoping that all their individual racks are operating within an acceptable range – whether that’s according to ASHRAE recommendations or other measures.

EkkoSense research suggests that 15% of the cabinets within the average data center currently operate outside of ASHRAE standards. This introduces a significant level of risk into the data center that is entirely avoidable. Given that the hardware contained within a server rack can range anywhere between $40k and $1m in cost, it’s clear that just hoping each rack is performing well introduces material risk and isn’t a sound long-term strategy. It also emphasizes the requirement for a new generation of DCIM 2.0 capability.
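Checking rack inlet readings against ASHRAE guidance is straightforward once the data exists; the hard part, as noted above, is that most operators do not collect it. The Python sketch below assumes the widely cited ASHRAE TC 9.9 recommended inlet range of 18 to 27°C and checks temperature only (the full ASHRAE envelope also sets humidity limits, which are ignored here). The rack IDs, readings and function names are hypothetical.

```python
from dataclasses import dataclass

# ASHRAE recommended inlet dry-bulb range in degrees Celsius (temperature only;
# the full envelope also has humidity limits, which this sketch ignores).
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0

@dataclass
class RackReading:
    rack_id: str
    inlet_temp_c: float

def out_of_range(readings):
    """Return readings whose inlet temperature lies outside the recommended range."""
    return [r for r in readings
            if not (RECOMMENDED_MIN_C <= r.inlet_temp_c <= RECOMMENDED_MAX_C)]

def out_of_range_share(readings):
    """Fraction of racks (0.0 to 1.0) outside the recommended range."""
    if not readings:
        raise ValueError("no rack readings supplied")
    return len(out_of_range(readings)) / len(readings)

if __name__ == "__main__":
    # Hypothetical rack inlet readings
    readings = [
        RackReading("A01", 22.5),
        RackReading("A02", 28.3),
        RackReading("A03", 17.2),
        RackReading("A04", 24.0),
    ]
    for r in out_of_range(readings):
        print(f"{r.rack_id}: inlet {r.inlet_temp_c} C outside "
              f"{RECOMMENDED_MIN_C}-{RECOMMENDED_MAX_C} C")
    print(f"{out_of_range_share(readings):.0%} of racks out of range")
```

Run across every cabinet, the same share calculation is what produces a site-level figure comparable to the 15% cited above.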

DCIM must get simpler if it’s to succeed

Everyone talks a good DCIM game but, dig deeper, and the reality is that it has often failed to deliver. To succeed, DCIM needs to get much simpler. Operations teams need to know what’s going on right now. That means real-time insight into all the equipment that’s currently running in their critical facilities, how much cooling power they are currently using and whether it’s actually all needed. They need to be confident that all their racks are 100% ASHRAE thermally compliant, and also have visibility into what’s happening beyond their white space.

These are all questions that a true DCIM solution ought to be able to answer. But experience shows that all the early hype around DCIM – and its continued failure to seamlessly marry the IT and M&E aspects of a data center operation – make it just too much of a risk for organizations to trust their critical facilities management to legacy DCIM. Despite this caution, there’s still a pressing need for next generation tools to help manage today’s ever more complex hybrid environments.

DCIM 2.0 – offering genuine data center insight and intelligent management

For DCIM to truly deliver on its original promise and provide data center teams with the kind of functionality they need, it’s important to recognize that it’s unlikely to come as a single, off-the-shelf solution. Instead, what’s required is an approach that enables a blend of truly integrated, specialist tools. Unlike traditional DCIM offerings that only monitored equipment and triggered alerts to problems, such a DCIM 2.0 solution needs to offer genuine data center insight and intelligent management capabilities.

Such a new class of smarter and more integrated tools needs to connect to facilities systems, network systems, IT systems and orchestrate continual change within a modern highly dynamic data centre environment. A next generation DCIM implementation should also be a catalyst in getting all data centre stakeholders to work together around core business processes that involve people, facilities and IT infrastructure, as well as all the various assets and critical resources available within the entire data centre ecosystem.

Unfortunately, the reality is that – until recently – this kind of smart DCIM 2.0-style functionality simply wasn’t available. However, with the transition towards more distributed IT systems and Edge deployments – coupled with the broader availability of key AI, machine learning, cloud, Internet of Things and Virtual Reality technologies – there’s now a real opportunity to take DCIM forward to the next level.

Major DCIM deployments always seem to struggle with integration. This refers to both the discipline-specific tools deployed in a data centre, as well as the operational processes and procedures that allow effective day to day management and – importantly – when and how to automate. This integration is critical to effective management and historically has either been impossible or too technically or financially challenging to implement to the point that it has been actively discouraged. In addition, the increasing move towards distributed, digital infrastructures and hybrid environments has been one of the most significant shifts, which now necessitates the overview and orchestration of digital infrastructure and environments across multiple sites.

A true DCIM solution needs to provide data center operators with a single source of truth based on an integration-ready approach that provides an ability to share data across multiple disparate systems. Any integration requirements should not be a barrier to rapid deployment or speed and ease-of-use. It’s also important that any such DCIM 2.0 solution should not attempt to be all things to all people. Instead, opt for an approach that can seamlessly hook into existing systems to take advantage of any unique capabilities or datasets that may already be in place.
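As a rough illustration of the ‘hook into existing systems’ idea, the Python sketch below merges per-asset records from several sources into one view keyed by asset ID, prefixing each field with its source so that conflicting values sit side by side instead of silently overwriting one another. The source names (an asset register and a BMS extract), field names and function name are all hypothetical, and the sketch assumes every system already uses the same asset IDs, which in practice is often the hardest part of integration.

```python
from collections import defaultdict

def merge_sources(*sources):
    """Combine per-asset records from several systems into one view keyed by asset ID.

    Each source is a (source_name, records) pair, where records maps
    asset ID -> {field: value}. Field names are prefixed with the source
    name so that conflicting values are kept side by side, not overwritten.
    """
    unified = defaultdict(dict)
    for source_name, records in sources:
        for asset_id, fields in records.items():
            for field, value in fields.items():
                unified[asset_id][f"{source_name}.{field}"] = value
    return dict(unified)

if __name__ == "__main__":
    # Hypothetical extracts from an asset register and a BMS
    asset_register = {
        "RACK-A01": {"location": "Hall 1, Row A", "owner": "IT"},
        "CRAC-03": {"location": "Hall 1, North wall", "owner": "Facilities"},
    }
    bms = {
        "RACK-A01": {"inlet_temp_c": 23.4},
        "CRAC-03": {"supply_temp_c": 18.0},
    }
    view = merge_sources(("assets", asset_register), ("bms", bms))
    for asset_id, fields in view.items():
        print(asset_id, fields)
```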

Core next generation DCIM requirements

While operations teams have often had a clear understanding of the kind of DCIM support they really wanted, it’s only recently that DCIM solution providers have been able to offer the functionality needed for DCIM 2.0-class performance. Key requirements here include:

- The capacity to reference, monitor, maintain and manage complex hybrid digital environments

- Support for expanding Edge adoption and geographically remote ‘dark’ sites

- Integration with a growing number of data sources and existing toolsets

- Capacity to offer an extended analysis of multiple datasets to produce useful insights and management information rather than just basic monitoring

- Support for rapid, genuine integration at low cost in order to create a single, real-time source of facilities and IT insight for all sites and asset types within the entire data centre stack

- Increased visibility, capacity / resource planning and management, particularly for remote unmanned sites

- More granular monitoring and analysis to help the broader organization address corporate environmental, net zero and other sustainability goals

- The automated provisioning of solutions based on company-specific rules and requirements, with the generation of detailed work orders that can be used by third-party contractors without supervision

- Increased operational efficiency to help unlock energy opex savings that offer a genuine DCIM ROI
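The integration and single-source-of-truth requirements above can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that every system (BMS, power metering, wireless sensors) reports readings tagged with a shared asset identifier; the `Reading` type, the source names and `latest_view` are hypothetical and do not describe any EkkoSense or vendor API.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Reading:
    source: str       # originating system, e.g. "bms", "pdu", "wireless" (hypothetical names)
    asset_id: str     # identifier shared by every system for the same rack or unit
    metric: str       # e.g. "inlet_temp_c", "power_kw"
    value: float
    timestamp: float  # seconds since the epoch


def latest_view(readings: Iterable[Reading]) -> Dict[str, Dict[str, Reading]]:
    """Merge readings from all sources, keeping the newest value per (asset, metric)."""
    view: Dict[str, Dict[str, Reading]] = {}
    for r in readings:
        metrics = view.setdefault(r.asset_id, {})
        current = metrics.get(r.metric)
        if current is None or r.timestamp > current.timestamp:
            metrics[r.metric] = r
    return view


readings = [
    Reading("bms", "R01", "inlet_temp_c", 23.9, 1000.0),
    Reading("wireless", "R01", "inlet_temp_c", 24.4, 1060.0),
    Reading("pdu", "R01", "power_kw", 5.2, 1030.0),
]
view = latest_view(readings)
print(view["R01"]["inlet_temp_c"].source)  # wireless
```

Keying everything on one shared asset identifier is the design choice that makes a single view possible; in practice, mapping each system's own identifiers onto that key is usually the hard part.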

Enabling a DCIM that delivers quantifiable ROI and energy savings

The combination of accelerated digital transformation and rising energy prices is placing growing pressure on data center teams, who must manage increasing workloads while also reducing energy usage to meet corporate environmental goals. While these seem to be conflicting goals, in reality they can only be reconciled through a serious commitment to performance optimization across all critical facilities. The good news is that next generation DCIM’s ability to move beyond basic monitoring and deliver valuable insights on managing facilities can help organizations improve their data center performance. Clear, intuitive live views help teams to manage operations against SLAs, KPIs and risk requirements, while the ability to view site-wide capacity constraints and energy usage provides real opportunities to optimize performance.

However, unless DCIM 2.0 is much easier to deploy and operate than its legacy predecessors, there’s a real risk that organizations will find it hard to unlock these benefits. In selecting a next generation DCIM approach it’s imperative to target solutions that are simple and quick to install, easy to operate and that will deliver valuable insights about how to manage your data center resources.

Here it’s necessary to identify solutions that will simplify infrastructure management for your data center teams. It’s a lot easier to identify value from your DCIM deployment if you can link it directly to clear benefits such as reduced thermal and power risk, optimized cooling capacity, and lower energy usage. Pricing structures also need to be transparent and easy to map against the changing demands of data center estates.

Machine learning and AI create a new paradigm for data center operations

Perhaps the key reason why major data center teams have been unable to achieve this level of DCIM functionality is that, until now, no tools could deliver such a broad range of DCIM capabilities. As a result, most data centers continue to follow traditional operating models that have been in place for decades. This produces an environment built to support the assumed and anticipated needs of the IT workload. Flexibility and future capacity are typically designed in and, in many cases, built out. But in practice, monitoring focuses on failures and alerts. Continuous measurement of performance, and of the relationship between the IT load and the critical infrastructure, rarely occurs.

The primary reason for this is the sheer volume of data involved, and the fact that most of the tools required to interrogate and interpret this data still rely on expensive human capital. By applying machine learning (ML) and artificial intelligence (AI) capabilities, however, data center teams can benefit from a new paradigm for data center operations. Teams that use machine learning to monitor their critical infrastructure and IT load gain better real-time visibility of the operation, of any potential risks and of how to address them, while also reducing energy consumption and lowering operating costs significantly.

The good news is that by combining the latest generation of low-cost wireless sensors with accessible 3D visualisation techniques, and intelligent analytics engines based on machine learning and smart AI algorithms, this kind of next generation DCIM approach is now available.

Now, with more IoT-connected wireless sensor devices, the sharing of multiple datasets and the capabilities of analytics engines, we are entering a realm of true data center management: not simply highlighting problems as they occur, but offering intelligent solutions based on machine learning and smart algorithms before the issues even develop. Having access to intuitive, real-time M&E Capacity Planning for your data center estate will allow you to run your operations much leaner. Such an approach goes beyond legacy IT-based DCIM tools to provide more tangible M&E insights into live space, power and cooling utilization.

Unlike DCIM solutions that can take years to implement, software-driven thermal optimisation gives data centre teams much faster access to the insights they need, at lower cost and with less human management overhead. The result is exactly the kind of data-driven decision-making and scenario planning that lets them move from simply monitoring critical facilities to identifying and acting on thermal, power and capacity opportunities.

That’s what we’re now delivering at EkkoSense, with an AI and machine learning led approach that doesn’t just highlight problems as they occur but actually delivers intelligent insights and recommended solutions before the issues even develop. And because it’s delivered under a Software-as-a-Service (SaaS) model, next-generation DCIM is already much easier to deploy than legacy DCIM approaches, with customers up and running quickly and time-to-benefit dramatically reduced.

Bringing new levels of data granularity to DCIM

The only truly reliable way for data center teams to troubleshoot and optimize data center performance is to gather massive amounts of data from right across the facility, with no sampling. This removes the risk of visibility gaps, but also introduces a new challenge in the sheer volume of real-time data being collected. ASHRAE still advocates measuring just one out of every three racks. While this lowers monitoring hardware costs, it can also introduce risks. Data center operations teams simply can’t assume that racks in close proximity behave in a directly correlated way. Each rack is a contained thermal environment and, as such, has its own thermal reality.

That’s why at EkkoSense we believe that true DCIM cooling optimization requires temperature and cooling loads to be monitored and reported much more granularly. Achieving this probably requires around 10x more sensors than are deployed in today’s typical data center. Indeed, EkkoSense’s own research suggests that just one in 20 M&E teams currently monitor and report actively on an individual rack-by-rack basis, and even fewer collect real-time cooling duty information.

The good news is that all the data is there, ready to be collected; operations teams just need to capture it. Unfortunately, traditional data gathering cost models have always made this an expensive exercise. That’s why at EkkoSense we’ve focused on disrupting this process with ultra-low-cost Internet of Things wireless sensors that can be deployed in much higher numbers across the data center. More sensors ensure much higher spatial resolution, right down to the rack level. By putting sensors on every rack, you can not only better optimize your environment, but also ensure that you are alerted when a threshold is breached, allowing a quick response.
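To show why rack-level coverage matters, the hypothetical sketch below compares sampling one rack in three with monitoring every rack. The readings are invented, and the 27°C limit is assumed here as the upper bound of the ASHRAE recommended inlet temperature range; real alerting rules would be site-specific.

```python
from typing import List

INLET_MAX_C = 27.0  # assumed alert threshold: ASHRAE recommended inlet upper bound


def breached_racks(inlet_temps_c: List[float], every_nth: int = 1,
                   threshold_c: float = INLET_MAX_C) -> List[int]:
    """Return indices of monitored racks whose inlet temperature exceeds threshold_c.

    every_nth=1 monitors every rack; every_nth=3 monitors only racks 0, 3, 6, ...
    """
    return [i for i in range(0, len(inlet_temps_c), every_nth)
            if inlet_temps_c[i] > threshold_c]


# Hypothetical inlet temperatures (°C) for one row of six racks; rack 4 is running hot
row = [22.1, 23.4, 22.8, 23.0, 28.6, 22.9]
print(breached_racks(row, every_nth=3))  # [] : the sampled racks look fine
print(breached_racks(row))               # [4]
```

With one-in-three sampling the hot rack is simply never measured, so no alert can fire, however good the alerting logic is.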

How granular do you need to be?

The average data center that EkkoSense instruments produces over 100,000 data points a day that are captured, interrogated, and correlated by the software. And with more and more monitoring data collected every day, we will have collected over 30 billion data points within the next year. This level of granularity translates directly into more reliable, higher quality data center optimization performance for operations teams.
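As a rough illustration of how quickly data points accumulate, the arithmetic below assumes a hypothetical room with 350 sensor channels, each reporting every five minutes. These figures are assumptions chosen to land near the 100,000-per-day figure, not a description of an actual EkkoSense deployment.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_data_points(sensor_channels: int, interval_s: int) -> int:
    """Readings per day when each channel reports once every interval_s seconds."""
    return sensor_channels * (SECONDS_PER_DAY // interval_s)


# Hypothetical room: 350 channels (temperatures, cooling duty, power) every 5 minutes
print(daily_data_points(350, 300))  # 100800
```

Halving the reporting interval or doubling the channel count doubles the daily volume, which is why the analysis has to be automated rather than left to operators.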

It’s only when you combine this level of granular data with machine learning software that you can start to track operational performance in real-time. Pursuing AI-powered optimization requires a proven, safe process that’s based on thousands of real-time sensors and expert spatial models that combine to remove the uncertainty from optimization and provide all the critical machine learning data to power risk reduction and efficiency initiatives – effectively changing the game for data center operators.

Leveraging innovative technologies to unlock the power of DCIM 2.0

Five technology trends are set to play a key role in unlocking the power of DCIM 2.0: AI and Automation; Cloud; Edge; Internet of Things; and Virtual/Extended Reality. Each has an important part to play in helping organizations realize DCIM 2.0 benefits, in contrast to earlier data center performance optimization initiatives, where legacy technologies and solutions always ended up being complex, technically heavy and expensive to deploy.

Organizations planning the next stage of their DCIM strategy need to recognize how these technologies can be leveraged to unlock new levels of data center optimization across their operations. Key drivers here include:

- AI and Automation – business-driven machine learning and AI analytics is pervading the enterprise, but it needs to have the right governance in place

- Internet of Things – estimates project that around 29 billion IoT devices will be connected online by the end of 2022

- Virtual/Extended Reality – with organisations such as the IEEE predicting an increased use of ‘Digital Twins’ in the manufacturing and industrial metaverse

- Edge computing – according to IDC, global Edge spending is expected to reach some $176 billion in 2022, up 14.8%, with organisations particularly investing in Edge computing combined with AI and the latest application designs

- Cloud – Research from Gartner suggests that more than eight out of ten organisations will ‘embrace a cloud-first principle by 2025’

Five key steps to AI and machine learning-powered DCIM

AI and machine learning will change the game for data center optimization, but if operations teams are to take full advantage of their potential, they need to take a structured approach to deployment. At EkkoSense we recommend a clear five-step approach to AI and machine learning-powered DCIM:

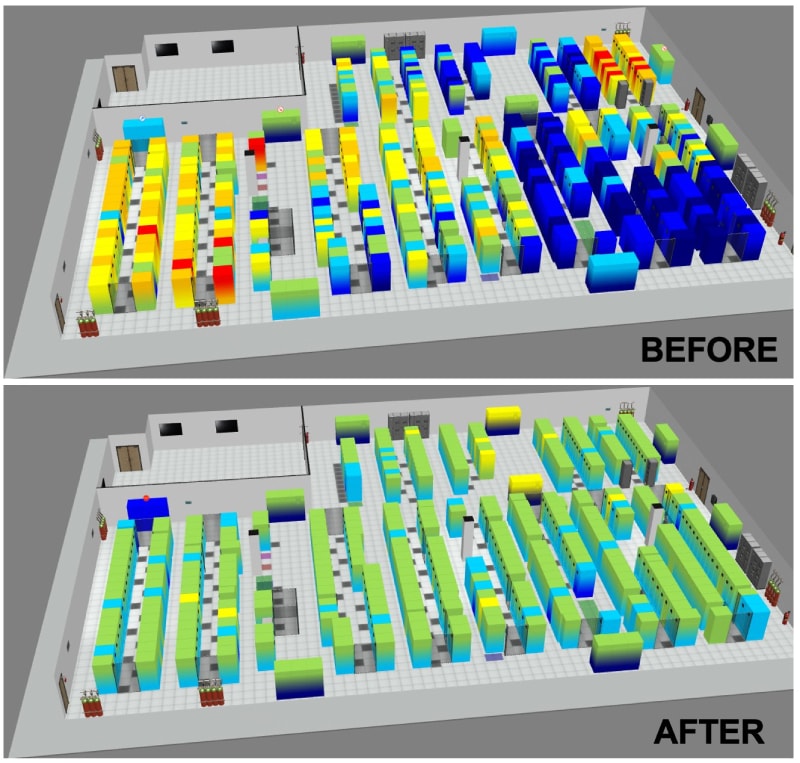

- Collect accurate cooling, power, and space data at a highly granular level – this allows you to observe how your data center is performing in real time. The illustrations used in this document represent a room that would produce over 100,000 new data points each day, covering what’s happening at the cooling units, rack temperatures, what the UPS is doing and what power is being produced, with all of that data correlated in real time. And with the availability of the latest ultra-low-cost wireless IoT sensors, there’s really no excuse not to do this now.

- Visualize complex data easily and quickly – humans simply are not good at staring at complex spreadsheets and trying to work out what they are saying. What’s needed is a new way to understand the relationships between all those data points, understand the Zones of Influence, and learn what impacts what. From a thermal management perspective, it’s a lot easier to look out for greens and light blues rather than scrolling through spreadsheets. With comprehensive 3D digital twin visualizations, data center teams can monitor and interpret this information quickly, allowing them to see exactly what’s happening across an entire data center estate, highlighting potential anomalies and displaying suggested airflow and cooling improvements.

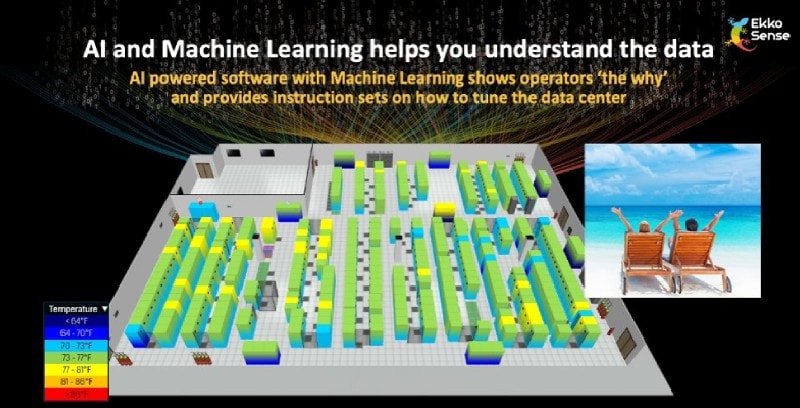

- Apply machine learning and AI analytics to provide actionable insights – augmenting measured datasets with machine learning algorithms provides data center teams with easy-to-understand insights to support real-time optimization decisions, backed by data for significantly improved cooling optimization and airflow management. AI and machine learning change the game, taking all those complex datasets and crunching the numbers, doing in seconds what people would take weeks to attempt and would most likely fail to achieve. With AI in place, data center teams benefit from fully correlated real-time data that’s presented in a distinctive, actionable way.

- Ensure delivery with actionable recommendations for human auditability – rather than rely on unwieldy automation solutions, EkkoSense advocates a light-touch approach to data center infrastructure management, with operations teams supported by AI-powered, actionable recommendations for greater human auditability. Expert data center operators still maintain control over decisions, recognizing that often they will have insights that simply weren’t available within the data sets. And because optimization recommendations are presented each time for human auditability, data center teams are always on hand to ensure that any changes are delivering the expected results.

- Deploy an ongoing continuous optimization approach – giving data center staff the capability for continuous optimization, while supporting them in keeping pace with their ever-changing critical facilities. Operations teams cannot optimize periodically and expect to stay in sync. Drift is natural, because regardless of how static data center employees believe their environment to be, change is always occurring. The machine learning algorithm is persistently monitoring and analyzing a team’s entire data center environment. It is always looking for ways to improve an estate’s operation, allowing teams to continually improve the way their facilities operate.

By following these steps, data center teams can benefit from an AI and machine learning-powered, software-driven optimization approach that unlocks significant benefits for data center operations. That’s why data center operations teams need EkkoSoft Critical, EkkoSense’s SaaS-based M&E optimization and capacity management solution, to help unlock the potential of AI and machine learning-powered data center infrastructure management.

EkkoSoft Critical specifically provides a platform for integrating and managing a new generation suite of AI and machine learning-enabled DCIM tools that can collaborate and share data. This creates a more comprehensive DCIM management capability than would ever be possible using a single product. For today’s data center operations teams, the combination of effortless collaboration, low installation overhead and greater insight from shared data makes EkkoSoft Critical an ideal platform for turning that data into useful management information using analytics, smart algorithms and AI.

EkkoSoft Critical – AI and machine learning powered data center optimization

Having put this approach into practice over the last five years at EkkoSense, it’s now possible to quantify the benefits of AI and machine learning powered data center optimization in action.

Traditional data center monitoring offered visibility and alerting but lacked the intelligence necessary to optimize data center operations. As a result, operations teams often learned about issues after they had occurred, leaving them very little time to react. We have established that the only reliable way for data center teams to troubleshoot and optimize performance is to gather massive amounts of data from right across the facility, but doing so introduces a challenge in the sheer volume of real-time data being collected. Operators have neither the time nor the expertise to interpret, calculate and act on thousands, if not millions, of data points in a timely manner and on a sustained basis.

That’s why at EkkoSense we focus on making it as easy as possible for data center operations teams to gather and visualize cooling, power and space data at a granular level. Our EkkoSoft Critical AI and machine learning-powered SaaS platform combines low-cost Internet of Things sensors with Doctorate-level thermal AI skills to crunch multiple complex M&E datasets, helping operations teams make instant optimization decisions, always backed by data.

AI-powered optimization software shows not only what’s happening but also why, allowing teams to make informed decisions on how to resolve issues. And by introducing powerful algorithms that correlate the relationship between the critical infrastructure and IT load, teams can materially reduce how often issues occur through persistent optimization. The software observes changes in the environment in real time and will often warn you that a failure is going to occur before it does.

The EkkoSoft Critical 3D visualization and analytics platform is particularly easy to use and understand. Data center operators can visualize airflow management improvements, manage complex capacity decisions, and quickly highlight any worrying trends in cooling performance. So instead of the limited view provided by most legacy DCIM systems, you actually get to see what’s happening across your data center floors in real time.

Operations teams might not initially like what they see. Red racks mean the temperature is too hot, and that means risk and problems. When the internal sensors within servers detect too much heat, the servers fight back. They spin up their internal fans to try to reject the heat, and start turning off services to lower energy consumption and reduce the heat being created. This puts the serviceable life of the server hardware at risk, and degrades the performance of the servers and their ability to meet business needs.

The path to recovery starts with understanding what’s happening and acknowledging that there are thermal issues that need resolving. It’s here that machine learning and AI can change the game for data center teams. Combining the power of artificial intelligence with real-time data from a fully-monitored room enables the creation of a comprehensive data center digital twin – one that not only visually represents current cooling, power, and thermal conditions, but that also provides tangible recommendations for optimization. This level of decision support can help operations teams take things to the next level, as the EkkoSoft Critical software will also recognize when changes have been made.

Powerful correlation engines learn how your data center is operating and why it operates that way, and show what can be done to improve things. EkkoSoft Critical can analyze multiple complex datasets simply and quickly and display them in a 3D environment, giving data center operators a real-time view of operational performance, with the digital twin refreshed to reflect updates in real time.

EkkoSoft Critical’s powerful thermal analysis capabilities mean that operations teams can also see when their rooms are being overcooled. Blue racks in EkkoSoft Critical’s 3D visualizations mean that facilities are being overcooled. If your data center is too cold, you’re adding humidity to the mix. That’s water, and water and electronics don’t mix. Allowing excess humid air to run across units to cool them risks shortening the serviceable life of the servers. However, the good news is that once teams have access to this kind of granular visibility, they can start to do something about it.
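The red, green and blue rack colouring described above can be expressed as a simple banding rule. This minimal sketch assumes the bands follow the ASHRAE recommended inlet range of 18 to 27°C; the function name is hypothetical, and the actual colour scale and thresholds used in EkkoSoft Critical's visualizations may differ.

```python
RECOMMENDED_MIN_C = 18.0  # assumed lower bound (ASHRAE recommended inlet range)
RECOMMENDED_MAX_C = 27.0  # assumed upper bound (ASHRAE recommended inlet range)


def rack_colour(inlet_temp_c: float) -> str:
    """Map a rack inlet temperature to a simple colour band."""
    if inlet_temp_c > RECOMMENDED_MAX_C:
        return "red"    # too hot: thermal risk to the IT equipment
    if inlet_temp_c < RECOMMENDED_MIN_C:
        return "blue"   # overcooled: cooling energy is being wasted
    return "green"      # within the recommended range


for temp in (16.5, 22.0, 29.0):
    print(temp, rack_colour(temp))
```

A real visualization would use a continuous gradient rather than three bands, but the same thresholds decide where "too hot" and "overcooled" begin.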

Unlocking quantifiable data center performance optimization benefits

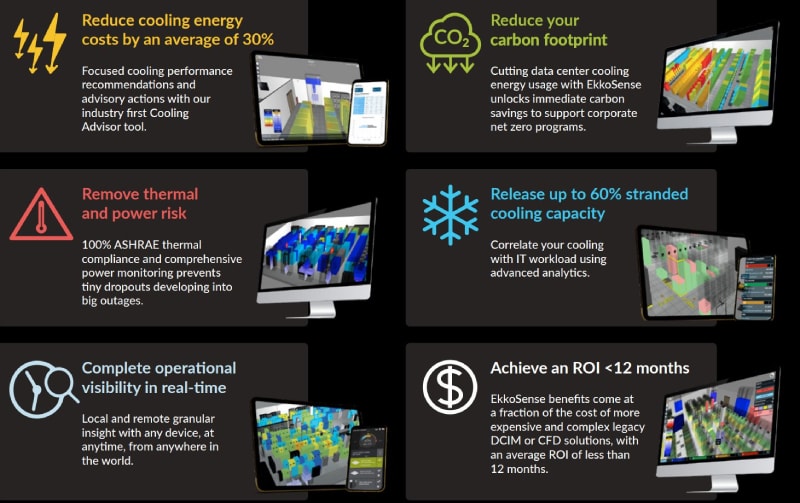

Organizations working with EkkoSense are now able to see the benefits of AI and machine learning data center performance optimization in action. In contrast to thermal consultants who may just suggest potential improvements, operations teams working with EkkoSoft Critical benefit from machine learning insights drawn from over 15 billion raw data points covering power and thermal readings. Key benefits include:

- Cutting cooling energy consumption by an average of 30%

- Unlocking up to 60% stranded cooling capacity

- Reducing thermal and power risks, enabling 100% ASHRAE thermal compliance

- Providing complete data center operational visibility in real-time

- Helping operations teams to make informed decisions, backed by data and with real-time visibility of the impact of those changes

- Securing data center energy savings to help reduce your carbon footprint

- All with a typical ROI of < 12 months – and at a fraction of the cost of more expensive and complex legacy DCIM or CFD systems
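The ROI claim comes down to simple payback arithmetic: upfront cost divided by net monthly savings. The sketch below is hypothetical; the function name and the cost and energy figures are invented for illustration, and only the 30% saving fraction is taken from the average quoted above.

```python
def payback_months(upfront_cost: float, annual_cooling_energy_cost: float,
                   saving_fraction: float, annual_running_cost: float = 0.0) -> float:
    """Months needed for net cooling energy savings to repay the upfront cost."""
    net_monthly_saving = (annual_cooling_energy_cost * saving_fraction - annual_running_cost) / 12
    if net_monthly_saving <= 0:
        raise ValueError("savings do not exceed running costs, so there is no payback")
    return upfront_cost / net_monthly_saving


# Hypothetical site: 200,000 a year spent on cooling energy, 30% saving, 45,000 upfront
print(payback_months(45_000, 200_000, 0.30))  # 9.0
```

Including recurring costs such as a SaaS subscription (the `annual_running_cost` parameter) lengthens the payback period, so it is worth modelling them explicitly when testing an ROI claim.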

Through its proven ability to unlock cooling energy savings, EkkoSoft Critical significantly reduces cooling equipment capex investment requirements, and delivers a valuable contribution to corporate NetZero commitments through sustained carbon usage reductions in the data center. The EkkoSense solution also provides an attractive business case as it enables true real-time M&E Capacity Planning for power, cooling and space – at a fraction of the cost of more expensive and complex DCIM solutions.

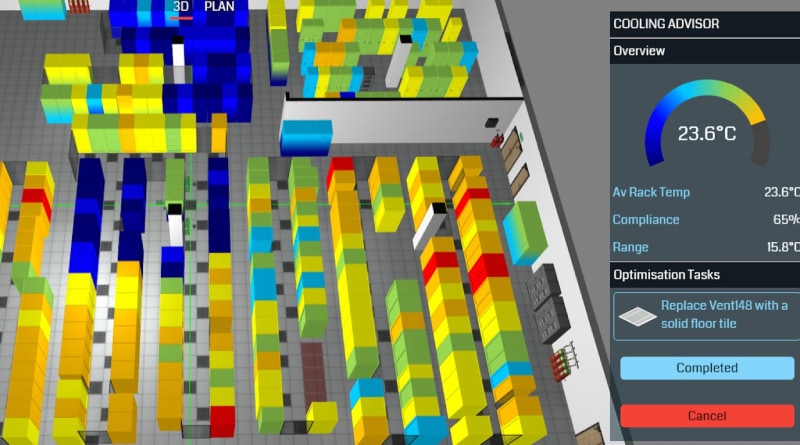

DCIM optimization on a continuous basis with Cooling Advisor’s AI-powered recommendations

Once initial optimization is complete, AI and machine learning algorithms continue to monitor and analyze the data center environment. EkkoSense’s unique Cooling Advisor machine learning and AI-powered advisory software tool provides operators with specific instruction sets on actions they can take to optimize their environment further and reduce risk on an ongoing basis.

Rather than relying on unwieldy automation solutions, Cooling Advisor enables a more light-touch DCIM approach, with operations teams supported by AI-powered, actionable recommendations for greater human auditability. Operators always maintain control over decisions, with the ability to discount Cooling Advisor recommendations where they have additional site insights. Instead of just monitoring and alerting, Cooling Advisor is able to translate data collected into valuable management information that enables data centres to stay optimized and secure both cooling energy and cost savings.

With Cooling Advisor, control and accountability stay with the operator, while guesswork is removed from decisions and results are visible in real time. The result is a proven and safe process, based on thousands of real-time sensors and expert spatial analysis, that enables ongoing optimization: the system persistently looks for ways to improve the environment while also analyzing the impact of any changes that have been made.
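The human-in-the-loop pattern described for Cooling Advisor can be sketched as a recommendation that stays pending until an operator approves or rejects it, with each decision logged and the impact of approved actions measured afterwards. Everything here (`Recommendation`, `decide`, `impact` and the sample values) is hypothetical and does not reflect Cooling Advisor's actual interface.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class Recommendation:
    action: str                                      # hypothetical, e.g. a setpoint change
    status: str = "pending"                          # only an operator moves it on
    audit: List[str] = field(default_factory=list)   # who decided what, and why

    def decide(self, operator: str, approve: bool, reason: str = "") -> None:
        """Record an operator's approval or rejection; each recommendation is decided once."""
        if self.status != "pending":
            raise ValueError("recommendation has already been decided")
        self.status = "approved" if approve else "rejected"
        entry = f"{operator}: {self.status}"
        if reason:
            entry += f" ({reason})"
        self.audit.append(entry)


def impact(before: List[float], after: List[float]) -> float:
    """Change in mean reading after an action (negative means a reduction)."""
    return mean(after) - mean(before)


rec = Recommendation("Raise CRAC-2 supply air setpoint by 1°C")
rec.decide("operator-a", approve=True, reason="no hot spots in zone")
print(rec.status, rec.audit)
print(impact([42.0, 41.0, 43.0], [37.0, 36.0, 38.0]))  # -5.0 (e.g. cooling kW)
```

Keeping the decision and its reason in the record is what makes the process auditable: anyone can later see which recommendations were acted on, by whom, and with what measured effect.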

Acting on the clear recommendations offered by Cooling Advisor’s algorithms, data center teams can keep on track in their journey to secure cooling energy savings. Now it’s much easier for operations teams to deploy machine learning and AI techniques to make sure their critical facilities stay optimized on a year-round basis.

3DCIM from EkkoSense – Data Center Infrastructure Management done right

Traditional DCIM has always been hampered by inaccurate data, with most of the solutions classed as DCIM in reality only offering very basic M&E and asset management capabilities. It doesn’t have to be that way.

Think about what DCIM ought to deliver: the ability to optimize performance across your entire data center infrastructure, not just the racks inside it, together with an asset management capability that reflects what’s populating your critical facilities today rather than a catalogue of what might have been there 18 months ago. Data center operations teams cannot get a full picture of what’s going on unless they have immediate visibility of all their assets and true, real-time operational insight into capacity, power, and cooling performance.

That’s where 3DCIM – EkkoSense’s integrated alternative to traditional DCIM – delivers. 3DCIM features the latest technology integration from EkkoSense and ASSETSPIRE to make enterprise-class DCIM available at a fraction of the cost of traditional, outdated, overpriced and difficult-to-manage alternatives.

The 3DCIM offering brings together two intuitive, best in class cloud-based data center performance optimization and management solutions:

- With its proven EkkoSoft Critical 3D visualization and analysis solution, EkkoSense makes it simple for operations teams to collect granular, real-time thermal, power and capacity data

- ASSETSPIRE’s customizable SPIRE asset control platform, which enables the fast and accurate capture of any data center asset and is particularly easy to use and keep up to date

Combining SPIRE with EkkoSoft Critical enables operations teams to track all their data center assets, monitor their operation at a granular level, and then visualize real-time performance using EkkoSense’s intuitive 3D visualization technology. And thanks to EkkoSense’s machine learning and AI models, the combined 3DCIM approach to Data Center Infrastructure Management not only highlights potential problems as they occur, but also offers clear recommendations to help resolve issues before they have a chance to develop. It’s DCIM that works without all the hassle and at a fraction of the cost of traditional solutions, giving operations teams access to the answers that much more expensive and complex DCIM solutions consistently fail to deliver.

Both 3DCIM components benefit from a SaaS delivery model, a flexible architecture and API integration, enabling self-installation timescales of days, not weeks, and bringing benefits to customers almost immediately. Key 3DCIM capabilities include:

- Full Remote Access and Visibility – deploying 3DCIM brings real-time visibility of both data center cooling, power and capacity performance as well as comprehensive asset management, with full remote access via mobile devices for 24x7x365 peace of mind and reduced risk

- Unlocking new levels of accuracy and granularity – 3DCIM enables new levels of accuracy and granularity, with SPIRE’s ease-of-use supporting 100% asset accuracy and EkkoSoft Critical’s comprehensive sensing unlocking significantly greater granularity than legacy DCIM approaches

- Enabling flexible SaaS architecture with full API integration – both EkkoSoft Critical and SPIRE feature a SaaS delivery model, a flexible architecture and full API integration

- Highly customizable asset control platform – ASSETSPIRE’s SPIRE customizable asset control platform accelerates the fast and accurate capture of any data center asset, serving as a centralized data management solution across DCIM, CAFM and CMDB environments

- Facilities Management support – 3DCIM’s next generation infrastructure management offering goes beyond data center white space to embrace key CAFM, CMDB, BMS and Mini-BMS environments – with full real-time visualization making 3DCIM an ideal optimization and asset management solution for operations teams

- ROI in < 12 months – 3DCIM benefits come at a fraction of the cost of more expensive and complex legacy DCIM solutions, with an average ROI of less than 12 months achievable through data center cooling energy savings, optimizing capacity, smarter asset management and reducing the need for additional cooling equipment Capex spending
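The asset accuracy point above comes down to reconciling what the register says against what is actually found on the floor. This is a minimal, hypothetical sketch using Python sets; the asset tags, function names and the simple accuracy ratio are assumptions, not how SPIRE measures accuracy.

```python
from typing import Dict, Set


def reconcile(register: Set[str], observed: Set[str]) -> Dict[str, Set[str]]:
    """Compare the asset register with an on-floor audit (sets of asset tags)."""
    return {
        "missing": register - observed,       # in the register but not found on the floor
        "unregistered": observed - register,  # found on the floor but not in the register
        "matched": register & observed,
    }


def register_accuracy(register: Set[str], observed: Set[str]) -> float:
    """Share of all known assets on which register and audit agree (1.0 = fully accurate)."""
    known = register | observed
    return len(register & observed) / len(known) if known else 1.0


register = {"SRV-001", "SRV-002", "SRV-003"}
observed = {"SRV-001", "SRV-003", "SRV-004"}
print(sorted(reconcile(register, observed)["missing"]))  # ['SRV-002']
print(register_accuracy(register, observed))             # 0.5
```

Both kinds of discrepancy matter: a missing asset distorts capacity figures, while an unregistered one draws power and cooling that nobody has planned for.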

For DCIM to truly deliver on its original promise, integrating best-of-breed products that meet the exacting demands of both M&E and IT teams is the way forward, and it’s an approach that allows EkkoSense to build out its 3DCIM offering to meet any DCIM requirement. The result is a flexible SaaS solution with full API integration that delivers enterprise-class DCIM performance at a fraction of the cost of legacy, overpriced and difficult-to-manage suites, with a typical ROI of less than 12 months. No more failed software procurements.

EkkoSense – your partner for DCIM 2.0

EkkoSense is a rapidly growing global SaaS company that has become an industry leader in software-enabled thermal performance optimization across critical facilities. EkkoSense’s unique software, EkkoSoft® Critical, utilizes artificial intelligence and machine learning to provide real-time visibility and data-driven recommendations for data center operators.

EkkoSense is a global software leader in the provision of AI and VR-driven performance optimization for critical live environments. The company’s distinctive mix of advanced sensing technology, SaaS DCIM-class visualization & monitoring software, and analytics solutions helps data center operators to achieve 100% ASHRAE thermal compliance and to make the most of their estate infrastructure by releasing stranded cooling capacity. EkkoSense customers secure an average 30% data center cooling energy saving following deployment of the company’s EkkoSoft Critical solution, leading directly to a typical ROI of under 12 months.

Proven capabilities across key innovation areas, including Internet of Things (IoT), Edge, virtual reality and 3D visualization, digital twins, DCIM and machine learning & AI, enable EkkoSense solutions to take full advantage of next generation technologies. In parallel, EkkoSense’s deep expertise in heat transfer and thermodynamics strongly influences its solution design.

This means that the company’s EkkoSoft Critical data center optimization software always reflects current best practice thinking in critical facilities performance. EkkoSense is also a leader in the provision of advanced sensing technology, powerful SaaS visualization & monitoring software, and AI/ML-powered analytics solutions for data centers and edge compute environments.

Driven by engineering first principles

Unlike traditional IT-led DCIM solutions, EkkoSense’s next generation DCIM data center optimization approach is always informed by engineering first principles. Core heat transfer and thermodynamics disciplines are central to how EkkoSoft Critical helps resolve thermal challenges. The company recognizes that optimizing data center performance goes way beyond IT, hence its deep understanding of energy efficiency and heat transfer across all aspects of critical facilities – from HVAC, building services and facilities management through to today’s revolutionary clean tech and energy efficient systems.

The EkkoSense team features a distinctive mix of leading industry practitioners offering decades of PhD-level data center thermal optimization and capacity planning expertise, industry-acknowledged experts in heat transfer and thermodynamics, leaders in the provision of clean tech and energy-efficient systems, as well as innovators in key technologies such as Internet of Things, gaming-class 3D visualization, digital twin systems, machine learning and AI.

This positions EkkoSense as the only organization to directly address the fundamental challenge of allowing operations teams to gather and visualize data center cooling, power, and space data at a granular level, while incorporating machine learning. We do this by bringing together an exclusive mix of technology and capabilities, including an innovative SaaS platform, low-cost Internet of Things (IoT) sensors, machine learning, AI analytics and PhD-level thermal skills. The result is EkkoSoft Critical, a 3D visualization and analytics platform that’s particularly easy for operations teams to implement, use and understand, and that lets you visualize airflow management improvements, manage complex capacity decisions, and quickly highlight any worrying trends in cooling performance.

How EkkoSense is different

While DCIM and traditional monitoring solutions do a good job of tracking assets within a data center, they only provide ‘moment in time’ data that is often inaccurate against real-time operating conditions. These legacy solutions do provide alerts when breaches occur, but they don’t support ongoing optimization. They also typically require significant resources to manage, because analysis and interpretation are left to operators and can take months to act on. Crucially, DCIM and traditional monitoring rarely deliver a measurable ROI.

In contrast, AI and machine learning-powered data center optimization from EkkoSense changes the picture completely. Real-time AI-driven data collection and analytics show operators not only WHAT is happening but also WHY, and provide prescriptive instruction sets that tell operators HOW to make the appropriate adjustments. This empowers data center teams to make auditable, data-backed decisions while seeing the results of those actions in real time, helping to keep their critical facilities fully optimized. Deployment takes days or weeks, value is typically realized within weeks of implementation, and EkkoSense customers often achieve ROI within 12 months.

How EkkoSense’s AI and machine learning-powered approach can make a difference

AI and machine learning techniques have been applied to optimize compute, storage, virtualization, and networking at the software layer for years, with efficiencies realized every day. However, when it comes to operating the data centers themselves, from the critical infrastructure (space, power, and cooling) to the IT load (the physical devices that go in the racks), AI and machine learning have hardly featured.

Adoption is now under way, however, and early indications are that this approach will become the standard over the next few years. Google has developed an AI/ML solution that it has deployed across its entire data center fleet, reporting reductions of up to 40% in the energy used for cooling. On the colocation and enterprise side, hundreds of data centers have now deployed AI/ML solutions, and the results and impact are undeniable. This approach is proving itself across all classes of data centers.

Key EkkoSense benefits

Data centers are still largely designed around anticipated IT requirements and, as such, must build in considerable flexibility to cope with uncertain loads that will change materially over time. As a result, technology that lets operators optimize in real time for the current operating state is critical. Operating at a level that doesn’t match current load requirements introduces risk, wastes energy, drives operating costs higher than necessary and increases carbon emissions.

Traditionally, as operators, we have tried to overcome this by leaning on our experience and expertise. But we have been limited by too little data, and by too little time to analyze and interpret the data we do have. AI/ML changes the game by solving that big data challenge, allowing us to incorporate that information into the decisions we make and to focus on implementation and execution.