Data center AI – A data center management guide from EkkoSense

Accelerate your time to AI compute benefits

Gen AI deployments: how ready are we really?

Navigating the AI gold rush in data center management

It’s a journey that organizations have either made, are making, or are about to make. There’s a pervasive fear of missing out, as stakeholders assess just how far they’ve progressed compared to everyone else.

And there’s also a fierce fight for available resources – it’s increasingly an AI gold rush as organizations look to secure their supply of AI compute resources – and the developers needed to build and train their AI applications.

In this eBook we’ll look at how this major AI reset is impacting the data center sector. We’ll then outline some ways that operations teams can derisk the deployment of AI functionality within their existing facilities.

Scale of opportunity leading to a scramble for AI resources

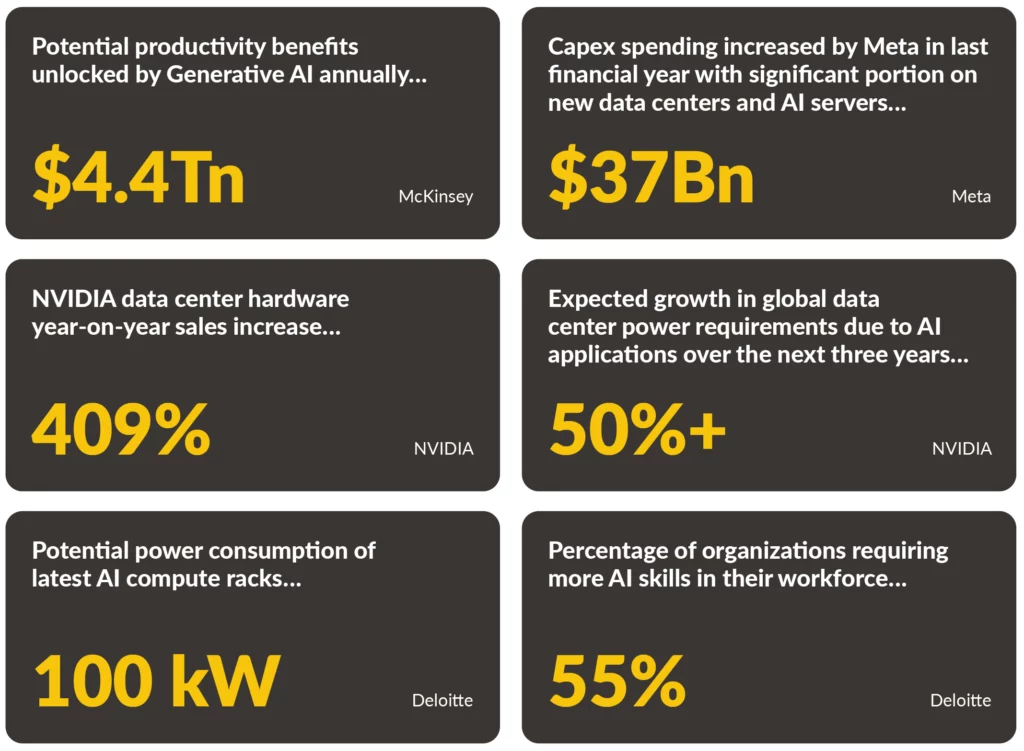

Sometimes it’s easy to underestimate the sheer scale of the shift towards AI. Management consultancy McKinsey suggests that Generative AI has the potential to change the whole ‘anatomy of work’, and reports that it could impact key activities across customer operations, marketing and sales, software engineering and R&D, unlocking productivity benefits of up to $4.4 trillion annually.

This substantial impact is reflected in the significant investments pouring into data centers. Meta alone increased its capex spending to $37 billion during its last financial year – with a significant portion of that on new data centers and servers for AI.

We’re also seeing a broad industry platform transition towards the building of AI clouds, as well as AI support for enterprise platforms, and the creation of energy-hungry large language models for Generative AI-based applications. Consequently, there’s a huge demand for AI compute platforms from vendors such as NVIDIA, described by Yahoo Finance as the ‘poster child of the AI frenzy’.

NVIDIA saw its data center hardware sales grow by some 409% year-on-year according to its latest figures. According to Dutch researcher Alex de Vries, NVIDIA’s projected growth and the AI applications it will support could see global data center power requirements jump by 50% within the next three years. And, with everyone looking to build bigger and more power-hungry AI models, this looks quite possible.
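As a rough way of putting these figures into yearly terms, the Python sketch below converts a 50% rise over three years into an equivalent annual growth rate, and expresses 409% growth as a multiplier. It assumes smooth compound growth, which real-world demand won’t follow exactly, and the function names are illustrative only.

```python
def implied_annual_growth(total_increase: float, years: int) -> float:
    """Constant annual rate r such that (1 + r) ** years == 1 + total_increase."""
    return (1 + total_increase) ** (1 / years) - 1


def growth_multiplier(percent_growth: float) -> float:
    """Convert a percentage growth figure into a multiplier (e.g. 409% -> 5.09x)."""
    return 1 + percent_growth / 100


# Assumption: the projected 50% rise in power demand compounds evenly over 3 years.
rate = implied_annual_growth(0.50, 3)
print(f"Implied annual growth in power demand: {rate:.1%}")
print(f"409% year-on-year growth = {growth_multiplier(409):.2f}x prior-year sales")
```

Under that assumption, a 50% increase over three years works out at roughly 14.5% growth per year, and 409% year-on-year growth means sales of about 5.09 times the previous year’s.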

At the same time there’s huge pressure on getting the right AI coding skills on board. Deloitte reports that 55% of organizations know they need more AI skills but are unable to source them. AI coders are both rare and expensive – and most are already fully occupied, tirelessly coding and building models, applications and tools at an incredible rate.

What will all this look like from a data center management perspective?

Every organization wants a piece of the AI action. There’s real concern that if they’re not developing or launching some form of AI-powered proposition – whatever their own flavor of AI might be – then they could get left behind.

It’s just over 15 months since Generative AI applications such as ChatGPT became available, and since then there’s been a huge drive for organizations to develop and deploy their own GenAI-powered propositions.

For those busy with AI coding development, the infrastructure to support those activities already exists. There may be some concern about where to place and how to cool AI compute racks that have a much higher density than standard racks, but it’s all achievable. However, the issue comes when these AI applications go live and need to be rolled out on a much broader consumer scale. We’re all talking about Gen AI deployments, but how ready are we really?

It’s time to address some very practical data center engineering challenges

With the processing of GPU-intensive workloads such as AI clearly set to generate more heat within data centers, operations teams need to think hard about their current infrastructure and how it will need to evolve.

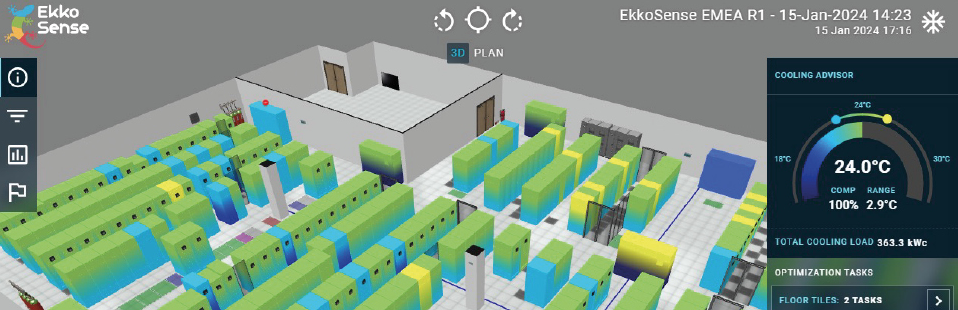

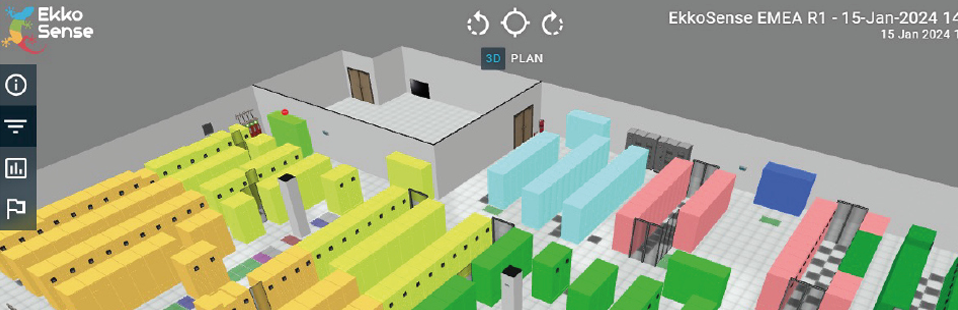

At EkkoSense, we’re using our AI-powered EkkoSoft Critical 3D visualization and analytics software to track this evolution.

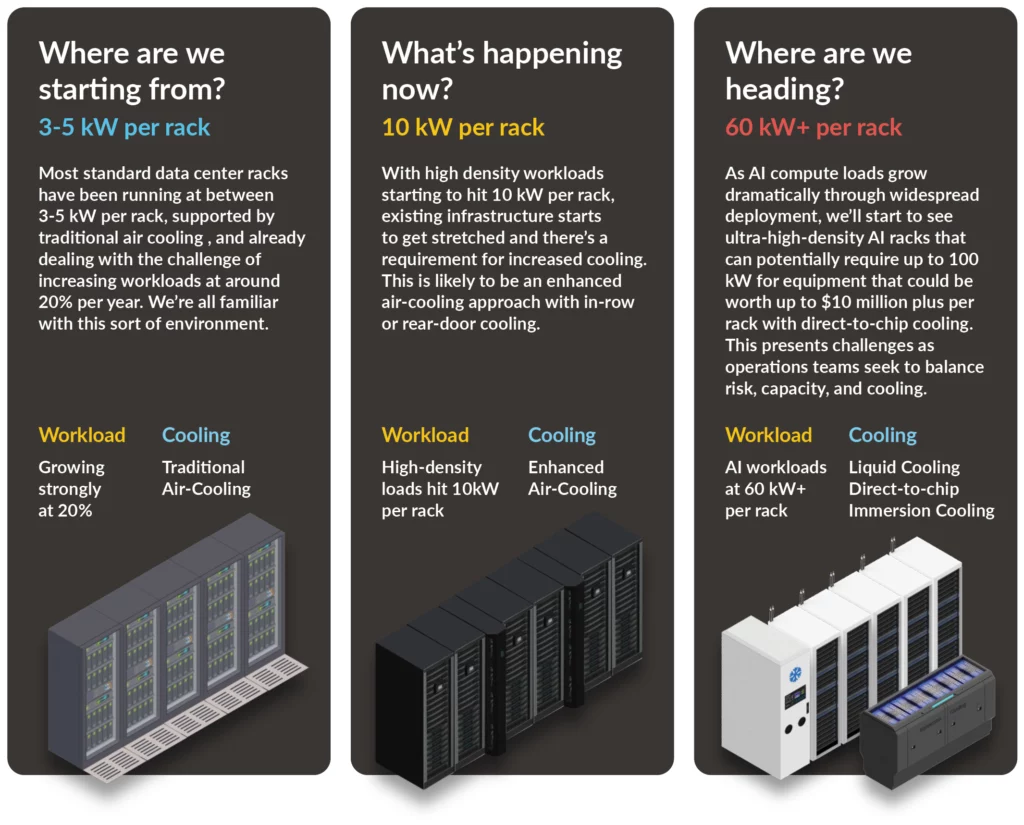

Where are we starting from? 3-5 kW per rack. Most standard data center racks have been running at between 3 and 5 kW per rack, supported by traditional air cooling, and are already dealing with workloads that increase by around 20% per year. We’re all familiar with this sort of environment.
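To see why 10 kW racks are arriving quickly, here’s a back-of-envelope sketch in Python. It assumes, purely for illustration, that per-rack power grows at the same ~20% annual rate as the workload; in practice growth is uneven and is often absorbed by adding racks rather than densifying existing ones.

```python
import math


def years_to_reach(start_kw: float, target_kw: float, annual_growth: float) -> float:
    """Years of compound growth needed to take a rack from start_kw to target_kw."""
    return math.log(target_kw / start_kw) / math.log(1 + annual_growth)


# Assumption: rack power draw grows at the same ~20% per year as the workload.
for start in (3.0, 5.0):
    years = years_to_reach(start, 10.0, 0.20)
    print(f"{start:.0f} kW -> 10 kW: {years:.1f} years")
```

Under that assumption, a 5 kW rack reaches 10 kW in about 3.8 years, and a 3 kW rack in about 6.6 years.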

What’s happening now? 10 kW per rack. With high-density workloads starting to hit 10 kW per rack, existing infrastructure starts to get stretched and more cooling is required. This is likely to mean an enhanced air-cooling approach with in-row or rear-door cooling.

Where are we heading? 60 kW+ per rack. As AI compute loads grow dramatically through widespread deployment, we’ll start to see ultra-high-density AI racks with direct-to-chip cooling that could require up to 100 kW each, housing equipment worth up to $10 million per rack. This presents challenges as operations teams seek to balance risk, capacity, and cooling.
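One way to see why air cooling runs out of road is the standard sensible-heat balance, P = ρ·c_p·Q·ΔT, solved for the airflow Q. The sketch below assumes that all rack power ends up as heat in the air stream, an air density of 1.2 kg/m³, a specific heat of 1005 J/(kg·K) and a 12 K front-to-back temperature rise. These are illustrative values, not design figures.

```python
AIR_DENSITY = 1.2      # kg/m^3, assumed (roughly sea level, ~20 °C)
AIR_CP = 1005.0        # J/(kg*K), specific heat of air at constant pressure
M3S_TO_CFM = 2118.88   # cubic feet per minute in one m^3/s


def required_airflow_m3s(it_load_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow needed to carry away it_load_kw of heat with a delta_t_k air temperature rise.

    Sensible heat balance: P = rho * cp * Q * dT  ->  Q = P / (rho * cp * dT).
    Assumes all electrical power drawn by the rack ends up as heat in the air.
    """
    return (it_load_kw * 1000.0) / (AIR_DENSITY * AIR_CP * delta_t_k)


for kw in (5, 10, 60, 100):
    q = required_airflow_m3s(kw)
    print(f"{kw:>3} kW rack: {q:.2f} m^3/s (~{q * M3S_TO_CFM:,.0f} CFM)")
```

Because airflow scales linearly with load, a 100 kW rack needs twenty times the air of a 5 kW rack at the same temperature rise, which is why direct-to-chip liquid cooling enters the picture at these densities.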

When planning for the future of your data center, it’s essential to consider several key factors. These include finding the best cooling technologies, planning for higher density AI racks with their increased power and infrastructure needs, and deciding on future equipment investments and the projected lifecycle of your existing facilities.

Now is not the time for complacency. While you may have managed previous expansions with ease, the landscape is rapidly evolving. Heat loads are becoming more dynamic, presenting challenges unlike those posed by traditional workloads such as payroll processing.

Achieving seamless deployment of AI applications hinges on meticulous preparation. Given the high value of AI compute racks, it’s crucial to have robust infrastructure, comprehensive cooling solutions, and reliable battery backups in place from the outset. Investing in readiness ensures your data center can support the demands of AI workloads effectively.


Need for absolute real-time white space visibility

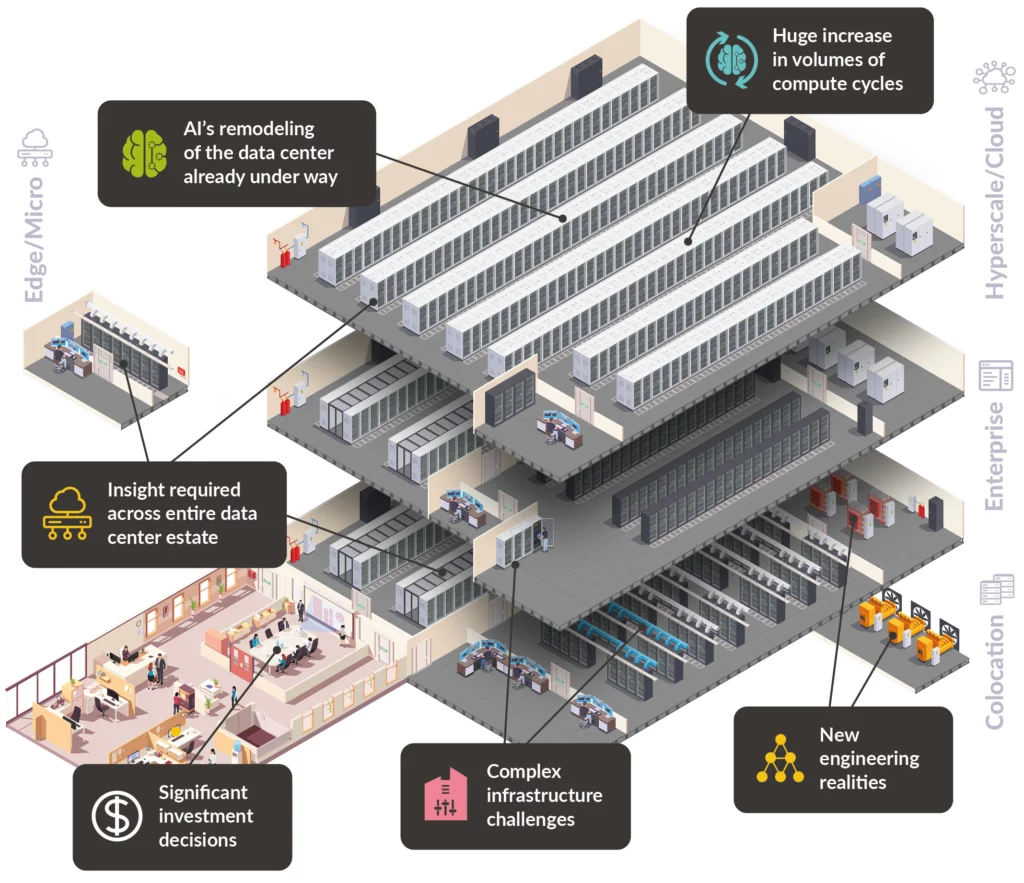

AI’s remodeling of the data center is clearly well under way. We’re already seeing that at EkkoSense – both through the sheer volume of compute cycles that our developers are now running on AI platforms, as well as developments at the many sites that we’re busy optimizing around the world.

What’s clear is that investment at this scale can quickly lead to new engineering realities – sometimes the sheer math involved can be frightening. And the infrastructure decisions you make now have the potential to constrain you by locking you into a particular approach.

Anything that we can do to help dial down the stress levels for data center teams is becoming even more important. Top of the list is knowing exactly what’s likely to happen from an infrastructure and engineering perspective when you launch your AI services.

So, what’s needed now is absolute real-time white space visibility. The ability to look across your whole data center estate and check that the assumptions you’re making on cooling, power and capacity actually stand up. And, if they don’t, getting insights as early as possible so that you can address any issues.

Giving your operations team all the support they need

That’s where EkkoSense is helping – giving operations the insights and support they need to manage their AI deployments in their existing data centers as reliably and cost-effectively as possible.

At EkkoSense we recognize that the latest high-density AI workloads require absolute visibility of data center white space.

Our EkkoSense AI software helps by:

- Applying in-depth IoT sensing to bring new levels of accuracy and granularity to data center operations.

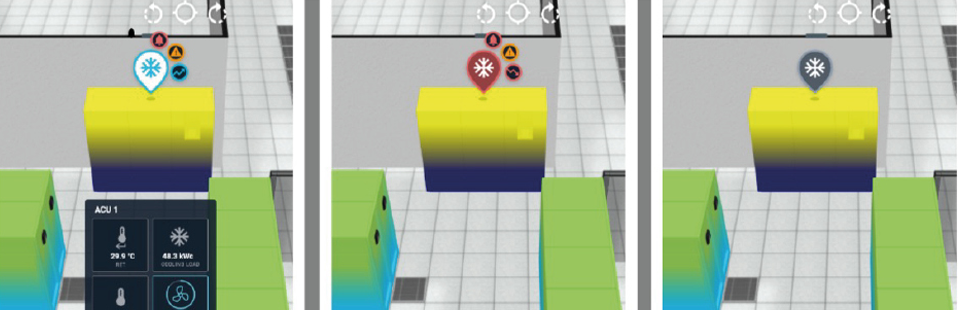

- Using AI-powered software to enable true real-time visibility of cooling, power, and capacity performance.

- Enabling operations teams to fine-tune their data center performance for maximum efficiency.

Applying AI to give operations teams even more control

EkkoSense is now taking this even further, deploying AI insights to give data center operations teams additional support.

Data center operations teams are busy enough without having to wade through over-complex optimization data. Humans simply are not good at staring at complex spreadsheets and trying to extrapolate what they are seeing. That’s why we provide an entirely new way for teams to understand the relationships within the data our software collects from a data center room each day, which can amount to as many as 100,000 new data points.

Given ongoing data center resourcing issues, it’s also important for operations teams to take full advantage of optimization solutions that can help improve their productivity. Effective AI-powered optimization software helps teams stay on top of their escalating workloads and sustainability tasks – whether that’s accessing proactive thermal advice through our embedded Cooling Advisor tool, identifying potential issues ahead of time through Cooling Anomaly Detection, or automating the production of time-consuming ESG and sustainability reports.

The consistent application of our EkkoSense AI software delivers significant benefits for operations teams: cooling energy savings of up to 30%, additional capacity released for IT loads, removal of thermal risk with 100% ASHRAE compliance, and quantifiable carbon savings to support corporate ESG initiatives.
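As a simplified illustration of what a compliance figure might be built from, the sketch below counts how many rack-inlet sensors sit inside ASHRAE’s recommended dry-bulb envelope of 18–27 °C. It ignores ASHRAE’s humidity and dew-point limits and the wider allowable ranges. The sensor names and readings are hypothetical, and this isn’t how EkkoSense calculates compliance.

```python
from typing import List, Mapping, Tuple

# ASHRAE recommended inlet dry-bulb range in °C (humidity limits omitted here).
ASHRAE_RECOMMENDED_C: Tuple[float, float] = (18.0, 27.0)


def compliance_percent(inlet_temps_c: Mapping[str, float],
                       limits: Tuple[float, float] = ASHRAE_RECOMMENDED_C) -> float:
    """Share of rack-inlet sensors whose latest reading falls inside the range."""
    if not inlet_temps_c:
        raise ValueError("no sensor readings supplied")
    low, high = limits
    compliant = sum(1 for t in inlet_temps_c.values() if low <= t <= high)
    return 100.0 * compliant / len(inlet_temps_c)


def out_of_range(inlet_temps_c: Mapping[str, float],
                 limits: Tuple[float, float] = ASHRAE_RECOMMENDED_C) -> List[str]:
    """Names of sensors reading outside the range, sorted for stable reporting."""
    low, high = limits
    return sorted(name for name, t in inlet_temps_c.items() if not low <= t <= high)


# Hypothetical latest readings, keyed by hypothetical sensor names.
readings = {"rack-A01": 22.4, "rack-A02": 24.9, "rack-B07": 28.3, "rack-B08": 17.2}
print(f"{compliance_percent(readings):.0f}% compliant; check {out_of_range(readings)}")
```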

Cooling Advisor – Using AI data analytics to provide actionable advice for continuous data center performance optimization.

Cooling Anomaly Detection – Identifying performance anomalies before potential equipment failure and enabling proactive maintenance.
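EkkoSense doesn’t describe its detection method here, so as a generic stand-in the sketch below flags readings that sit several standard deviations away from a trailing-window mean (a rolling z-score). The window size, threshold and supply-air temperatures are hypothetical tuning choices.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=12, threshold=3.0):
    """Yield (index, value, z) for readings far from the trailing-window mean."""
    history = deque(maxlen=window)   # only the most recent `window` readings
    for i, v in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0:            # a perfectly flat window gives no usable scale
                z = (v - mu) / sigma
                if abs(z) >= threshold:
                    yield i, v, z
        history.append(v)


# Hypothetical supply-air temperatures (°C) from one cooling unit.
supply_air_c = [18.0, 18.2, 17.9, 18.1, 18.0, 18.3, 17.8, 18.1, 18.0, 18.2, 17.9, 18.1,
                21.5, 18.0]
for i, value, z in rolling_zscore_anomalies(supply_air_c):
    print(f"reading {i}: {value:.1f} °C (z = {z:.1f})")
```

With these numbers only the 21.5 °C spike at index 12 is flagged. A rolling z-score is simple, but a slowly drifting sensor can shift the trailing mean along with it and escape detection.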

Capacity Planning – Applying an AI analytics engine to enable the ongoing capacity management of site cooling, space and power for allocation and reservation across the full power chain.
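The core idea of checking headroom across several constraints at once can be sketched as follows. For each resource, subtract current use and reservations from capacity, work out how many identical new racks fit, and report whichever constraint binds first. The room figures are hypothetical, and the sketch ignores redundancy, per-row power distribution and airflow paths that a real capacity model would have to account for.

```python
import math
from dataclasses import dataclass


@dataclass
class Resource:
    """One constraint in the chain, e.g. cooling (kW), UPS power (kW) or floor space (racks)."""
    name: str
    capacity: float
    used: float
    reserved: float = 0.0   # capacity already promised to planned deployments

    @property
    def headroom(self) -> float:
        return max(self.capacity - self.used - self.reserved, 0.0)


def racks_that_fit(resources, demand_per_rack):
    """Return (rack count, binding constraint name) for identical new racks.

    demand_per_rack maps each resource name to what one new rack consumes from it.
    """
    fits = {r.name: math.floor(r.headroom / demand_per_rack[r.name]) for r in resources}
    binding = min(fits, key=fits.get)
    return fits[binding], binding


# Hypothetical room.
room = [
    Resource("cooling_kw", capacity=600, used=380, reserved=40),
    Resource("ups_kw", capacity=800, used=420),
    Resource("rack_positions", capacity=120, used=96),
]
count, limit = racks_that_fit(room, {"cooling_kw": 100, "ups_kw": 100, "rack_positions": 1})
print(f"{count} x 100 kW AI racks fit; limited by {limit}")
```

Here the room has plenty of floor space and UPS headroom, but cooling limits it to a single 100 kW rack; the same room could take 24 conventional 5 kW racks before running out of rack positions.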

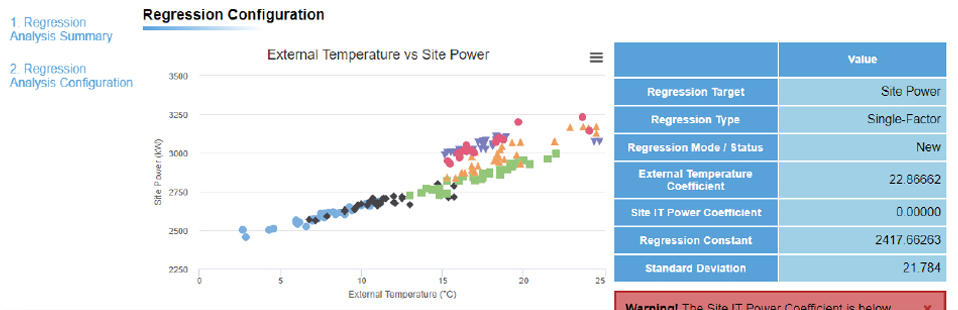

Intelligent Energy Tracking – Drawing on site data analytics and local weather data to compare actual site performance against its optimized state and identify performance divergence.

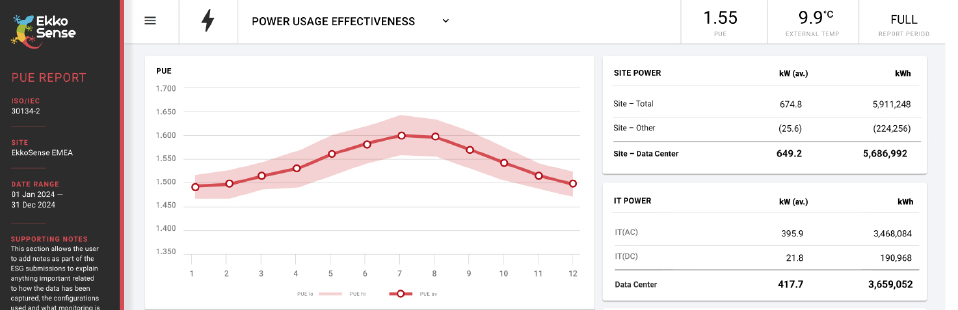

ESG Reporting – An embedded solution to collect, trend, analyze and report on ISO/IEC 30134 ESG reporting requirements such as PUE, CER, CUE and WUE.
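For reference, the headline ratios can be computed in simplified form. PUE is total facility energy over IT energy, and CUE is the carbon emitted by facility energy per unit of IT energy. WUE is site water per unit of IT energy, and CER is heat removed per unit of cooling energy. The sketch below omits the standard’s measurement boundaries, categories and reporting periods, assumes a single emission factor for all supplied energy, and uses hypothetical annual figures.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class AnnualSiteData:
    total_facility_kwh: float    # all energy entering the data center
    it_kwh: float                # energy consumed by IT equipment
    cooling_kwh: float           # energy consumed by the cooling system
    heat_removed_kwh: float      # heat removed from the data center by cooling
    site_water_litres: float     # water used on site
    kgco2e_per_kwh: float        # assumed single emission factor for supplied energy


def iso30134_metrics(d: AnnualSiteData) -> Dict[str, float]:
    """Simplified PUE, CUE, WUE and CER (measurement categories and boundaries omitted)."""
    return {
        "PUE": d.total_facility_kwh / d.it_kwh,
        "CUE_kgCO2e_per_kWh": d.total_facility_kwh * d.kgco2e_per_kwh / d.it_kwh,
        "WUE_L_per_kWh": d.site_water_litres / d.it_kwh,
        "CER": d.heat_removed_kwh / d.cooling_kwh,
    }


# Hypothetical annual figures for one site.
site = AnnualSiteData(total_facility_kwh=15_000_000, it_kwh=10_000_000,
                      cooling_kwh=3_500_000, heat_removed_kwh=10_500_000,
                      site_water_litres=18_000_000, kgco2e_per_kwh=0.2)
for name, value in iso30134_metrics(site).items():
    print(f"{name}: {value:.2f}")
```

With these inputs the site reports a PUE of 1.5, a CUE of 0.3 kgCO2e/kWh, a WUE of 1.8 L/kWh and a CER of 3.0.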

Beyond best practice data center management with EkkoSIM

Applying best practice optimization will help you to get your existing infrastructure operating as efficiently as it can – and help you to know exactly where you stand in terms of your current cooling, power, and capacity needs. And once you’ve got to this stage, the task is to make sure you maintain continuous optimization at a granular level – constantly rightsizing your loads and delivering for the business.

However, given the inevitable shift towards AI computing, there are now some more profound engineering questions that you’ll also need to answer as part of your data center management best practices, such as:

- How much more can you do with your sites as they are?

- How much IT load can you put into a space that has just been vacated without changing the infrastructure?

- What spaces can you use for high-density loads?

- What equipment will you need to replace? And when?

Given the sheer scale of AI computing, these are questions that need very precise answers. You can’t just keep absorbing a bit more load each time – that may be possible when you’re adding 2 kW here or 3 kW there. But, with the latest AI compute racks potentially consuming up to 100 kW of power, it’s essential that you understand your site limitations.

Here’s where our next key development can make a difference to your data center management. EkkoSIM is an entirely new tool that draws on our 50-billion-point data lake to build precise infrastructure simulation models that take the guesswork out of data center planning.

EkkoSIM’s unlimited simulation capability lets you explore what-if scenarios and get a sense of exactly what’s achievable with your current infrastructure – and when you need to start thinking about extending your facility or begin work on a new build.
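EkkoSIM’s simulation models aren’t described in this guide, so the Python sketch below is only a much cruder illustration of what-if thinking. It checks whether the residual air-side heat from a number of new AI racks fits within room cooling kept at N+1 redundancy. It assumes a hypothetical fraction of each rack’s heat is captured by direct-to-chip liquid cooling. All figures are hypothetical, and neither the liquid loop’s own heat-rejection capacity nor the power chain is checked.

```python
def air_cooling_check(existing_kw, ai_racks, kw_per_rack, liquid_capture,
                      cooling_units, unit_kw):
    """Return (air-side heat load kW, usable air cooling kW at N+1, fits?)."""
    usable_kw = (cooling_units - 1) * unit_kw    # N+1: survive the loss of any one unit
    air_kw = existing_kw + ai_racks * kw_per_rack * (1 - liquid_capture)
    return air_kw, usable_kw, air_kw <= usable_kw


# Hypothetical room: 300 kW of existing air-cooled load, six 100 kW cooling units.
for capture in (0.0, 0.75):                      # share of AI rack heat taken by liquid
    for racks in (2, 4, 6, 8):
        air_kw, usable_kw, fits = air_cooling_check(300, racks, 100, capture, 6, 100)
        status = "fits" if fits else "exceeds"
        print(f"capture={capture:.0%} racks={racks}: "
              f"{air_kw:.0f}/{usable_kw} kW air-side, {status}")
```

Without liquid capture only the two-rack scenario fits; with 75% of each rack’s heat going to liquid, all four scenarios stay within the air-side limit.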

With EkkoSIM you can start derisking your AI journey and establish how to deliver the AI compute your organization needs as cost-effectively as possible.

Dr. Stuart Redshaw

CTIO and Co-Founder, EkkoSense
