Data centre management: five key steps data centres should take to be ready for the next heatwave

In July 2022, a UK heatwave brought record temperatures of over 40C that clearly put data centres and their associated cooling infrastructure under pressure. Reports of a ‘cooling-related failure’ at a Google building and ‘cooler units experiencing failure’ at Oracle show that even the largest operators can be affected by exceptionally high temperatures. Perhaps of more concern was the report that two of London’s largest hospitals had to ‘move to paper’ when their IT servers broke down in the record heat, leading to the declaration of a critical incident.

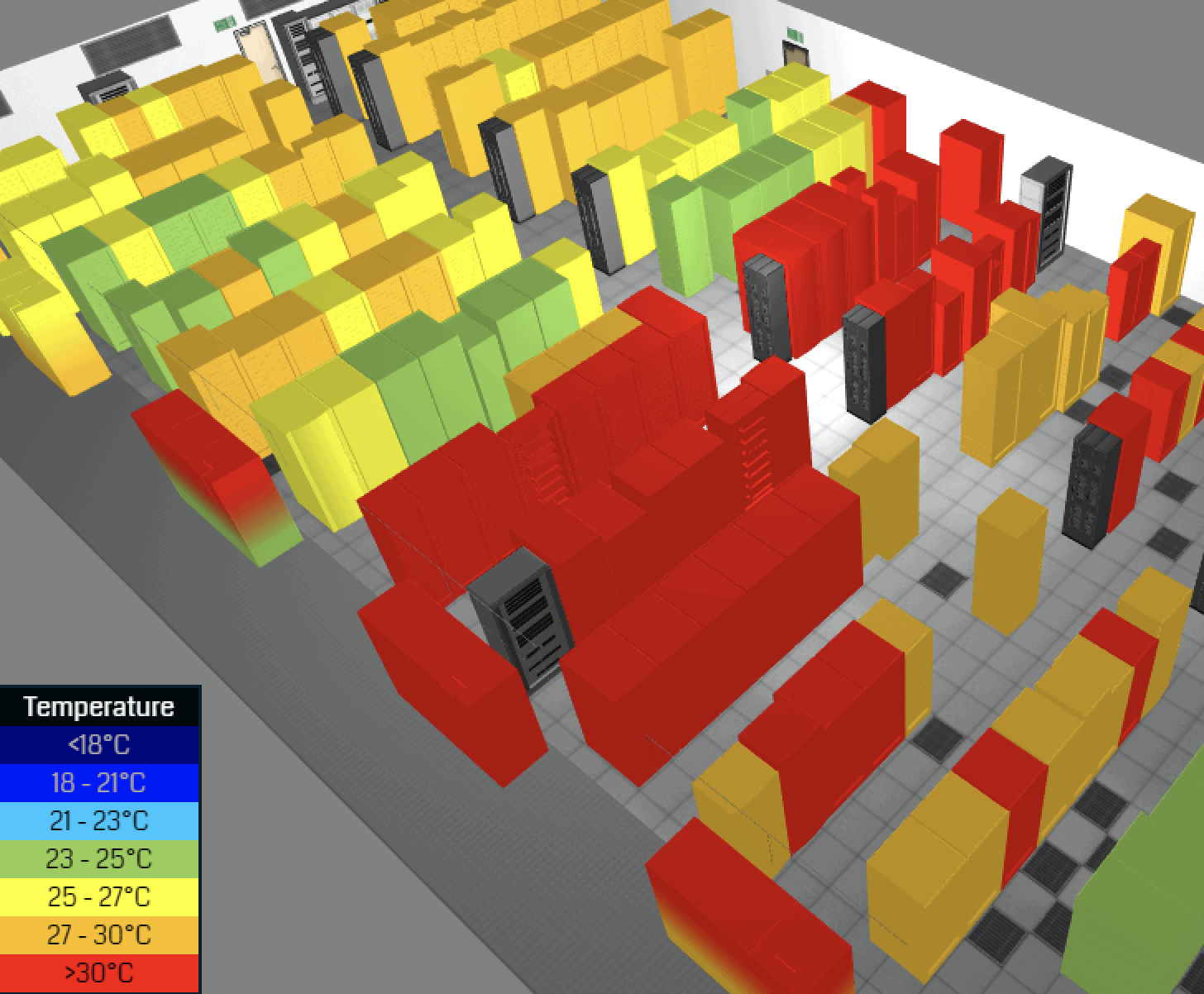

While incidents like these are always likely to attract headlines, it’s important to note that the majority of data centres, representing thousands of facilities across the country, successfully maintained service delivery despite the record temperatures. At EkkoSense we monitor the real-time status of thousands of racks across facilities of all sizes, and we detected hardly any significant thermal performance anomalies in data centres that had already benefited from cooling optimisation. Indeed, we barely saw more than 1C of variation within fully optimised rooms!
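One way to read the ‘1C variation’ figure is as the spread (warmest minus coolest) of rack inlet temperatures within a room at a given moment. The minimal Python sketch below computes that spread and flags rooms above a threshold. It is an illustration only, not EkkoSense’s method, and the room names, readings and 1.0C threshold are hypothetical.

```python
# Sketch: per-room spread of rack inlet temperatures (hypothetical data).

def temperature_spread(readings_c: list[float]) -> float:
    """Return the warmest minus the coolest inlet reading, in degrees C."""
    if not readings_c:
        raise ValueError("a room needs at least one reading")
    # Round to 2 d.p. to hide floating-point noise such as 0.7999999.
    return round(max(readings_c) - min(readings_c), 2)


def rooms_exceeding(rooms: dict[str, list[float]], threshold_c: float = 1.0) -> list[str]:
    """Return, sorted by name, the rooms whose spread is above threshold_c."""
    return sorted(name for name, temps in rooms.items()
                  if temperature_spread(temps) > threshold_c)


if __name__ == "__main__":
    # Hypothetical rack inlet readings (degrees C) sampled at the same moment.
    rooms = {
        "hall-a": [22.6, 23.1, 23.4, 22.9],
        "hall-b": [21.8, 24.9, 23.0, 26.2],
    }
    for name, temps in rooms.items():
        print(f"{name}: spread {temperature_spread(temps)}C")
    print("rooms over 1.0C:", rooms_exceeding(rooms))
```

With the sample data, hall-a shows a 0.8C spread and hall-b 4.4C, so only hall-b is flagged.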

That’s not to say there weren’t issues that affected performance for some operators, but these invariably stemmed from thermal performance failures outside the data centre. There were frequent reports of Facilities Management (FM) teams having to hose down external cooling equipment to stop it overheating. That’s hardly surprising, given that almost all external cooling infrastructure was never designed to perform at ambient temperatures of 35C or above.

While this kind of intervention can help slow down overheating plant, it’s much harder for operations teams to handle more systemic failures. At Google, for example, the issue was a ‘simultaneous failure of multiple, redundant cooling systems’, while at Oracle ‘two cooler units in the data centre experienced a failure when they were required to operate above their design limits’. With these kinds of incidents, there’s really little option but to divert traffic and move quickly into a protective shutdown.

What these examples illustrate is the challenge organisations face when they can’t guarantee the performance of their external cooling infrastructure. You may be running an n+1 facility, but n+1 only covers the loss of a single unit: if two cooling units go offline at the same time, you’re left with less cooling capacity than the load needs (effectively n-1), a failure scenario that only n+2 redundancy would have covered.
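The redundancy arithmetic is simple enough to sketch. Assuming every cooling unit has the same capacity, and ignoring the capacity derating that high ambient temperatures can cause, the Python below works out n for a given load and checks how many units are left after failures. The 800 kW load and 200 kW unit size are hypothetical figures chosen for illustration.

```python
# Sketch: does an n+k cooling plant still cover the load after unit failures?
import math


def units_required(it_load_kw: float, unit_capacity_kw: float) -> int:
    """n: the number of equal-capacity cooling units needed to carry the load."""
    return math.ceil(it_load_kw / unit_capacity_kw)


def capacity_check(it_load_kw: float, unit_capacity_kw: float,
                   installed_units: int, failed_units: int) -> tuple[int, int, int]:
    """Return (n, units still running, surplus); a negative surplus is a shortfall."""
    n = units_required(it_load_kw, unit_capacity_kw)
    running = installed_units - failed_units
    return n, running, running - n


if __name__ == "__main__":
    load_kw, unit_kw = 800.0, 200.0                   # hypothetical figures: n = 4
    installed = units_required(load_kw, unit_kw) + 1  # an n+1 design: 5 units
    for failed in range(3):
        n, running, surplus = capacity_check(load_kw, unit_kw, installed, failed)
        status = "load covered" if surplus >= 0 else f"short by {-surplus} unit(s)"
        print(f"{failed} failed: {running} running, n = {n}, {status}")
```

With two of the five units down, three remain against the four needed, leaving the room one unit short; an n+2 design (six units) would still have covered the load.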

The extreme temperatures also caused external failures that simply hadn’t been planned for. Reported issues included poorly maintained heat exchangers whose copper pipes and fine aluminium fins had failed in the heat trapped within an enclosed external space. Also significant were several incidents of high-pressure switches tripping out and shutting down refrigeration systems. In both cases, equipment designed for a maximum operating environment of 35C simply couldn’t function correctly at temperatures up to 5C higher.

Most original designs never anticipated operating temperatures like these. Combined with the fact that many external cooling systems are 10-15 years old and running with the degraded performance you would expect at that age, this goes some way to explaining why so many of these failures occurred. The performance of external cooling systems clearly has a major potential impact on the success of data centre management during sustained heatwaves, and it again focuses attention on the roles played by both IT and Facilities Management in keeping sites running through difficult conditions. If sites then significantly breach their SLAs, businesses are unlikely to accept well-practised IT/FM turf-war arguments as a justification for failure.

Extreme heat clearly affects external plant, but the impact is felt inside the data centre, where things can go wrong very quickly. If a site is correctly optimised and thermal conditions suddenly start to deteriorate, it’s hard for data centre management teams not to leap to conclusions. Instead of moving quickly to identify and isolate the root cause of a temperature surge, many operators start changing things within the data centre.

Teams start moving floor tiles or reducing set-points, not realising that the root cause of the problem isn’t in the data centre at all. Our analysis shows that in situations like this there’s simply no point trying to change your data centre’s thermal dynamics on the hottest day of the year! Across tens of thousands of racks, we couldn’t detect any effective in-room interventions carried out during the heatwave’s peak.

You can’t fix things on the day – but you can be prepared

The answer, of course, is that while you can’t fix things on the day, you can go into the summer, and any potential heatwaves, in the best possible condition. With extremely hot days becoming more likely, data centre teams need to be prepared for more events like this.

There are steps operations teams can take to make sure they are ready when the next 40C-plus event occurs. Here are EkkoSense’s five key recommendations:

1. Conduct a pre-summer high-temperature incident audit across all your data centre sites – All your facilities will need to be well optimised from a thermal, power and space perspective before going into the summer and any possible extreme heat events. A detailed high-temperature incident audit across all sites is essential, covering not only your data centre facilities but also all your external cooling plant and other infrastructure, and ensuring they are properly maintained. This will require close co-operation between IT and Facilities Management teams.

2. Learn from the July 2022 heatwave – with record-breaking 40C+ temperatures, any data gathered during this unique high-ambient-temperature UK weather event should prove invaluable. Despite all the discussion around climate change, very few operators had actually considered its potential impact on their data centres, with 35C still baked into specifications as the highest likely operating temperature. These standards now need to be reset, with July 2022 serving as the extreme template, and that work needs to start immediately.

3. Action this Year! – Summer 2023 will come round quickly enough, so it’s important that any remedial cooling infrastructure maintenance or other improvements necessary following the heatwave are carried out as quickly as possible. This is particularly important given the many supply chain issues that are currently impacting hardware and parts orders.

4. Avoid ‘heat of the moment’ actions – extensive real-time data shows that you shouldn’t interfere with an already-optimised data centre when it’s being affected by an external failure; you’ll only make things worse. Adjusting set-points or rearranging floor tiles won’t fix a problem that originates outside the room.

5. Create a ‘Heatwave Grab Pack’ of things to do – develop a readily accessible checklist that’s ready for when the next heatwave arrives (and it will). It should detail things to do (and not do), key contact details and pre-agreed next-step mitigation plans, as well as any plans for emergency cooling.
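Recommendations 1 and 2 meet in the plant register. As a rough first pass, the Python sketch below flags external cooling plant whose rated maximum operating ambient falls below a revised design baseline. It assumes a 40C baseline reflecting July 2022’s peak (an operator may well choose a higher figure for headroom); the `PlantItem` record, asset names and ratings are hypothetical, and a real audit would use manufacturers’ data and allow for age-related degradation.

```python
# Sketch: flag external plant rated below a new ambient design baseline.
from dataclasses import dataclass


@dataclass
class PlantItem:
    name: str
    rated_max_ambient_c: float  # maximum operating ambient from the manufacturer's data


def below_baseline(items: list[PlantItem], baseline_c: float = 40.0) -> list[tuple[str, float]]:
    """Return (name, shortfall in degrees C) for each item rated below baseline_c."""
    return [(item.name, round(baseline_c - item.rated_max_ambient_c, 1))
            for item in items
            if item.rated_max_ambient_c < baseline_c]


if __name__ == "__main__":
    baseline = 40.0
    # Hypothetical plant register; real ratings come from manufacturers' data.
    plant = [
        PlantItem("dry-cooler-1", 35.0),
        PlantItem("chiller-2", 46.0),
        PlantItem("condenser-3", 38.0),
    ]
    for name, shortfall in below_baseline(plant, baseline):
        print(f"{name}: rated {shortfall}C below the {baseline:g}C baseline")
```

Here dry-cooler-1 (rated 5.0C short) and condenser-3 (2.0C short) would go on the remedial list, while chiller-2 clears the baseline.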

As ever, close dialogue between IT and Facilities Management teams is critical in ensuring the success of any data centre operator’s heatwave mitigation plans. Following these five recommendations will help to give your team a head start!