The current state of datacenter optimization – why most operators are still in the dark

An article on datacenter optimization for operators and the wider datacenter industry.

Data centers exist to house all the compute, storage, networking, and connectivity needed to run a business – not just today, but tomorrow, five years from now, and even ten years from now.

Understanding those needs isn’t easy, and most operators don’t do it well. That’s hardly surprising. A new data center typically begins operating anywhere from 6 to 36 months after the design phase starts, and given how quickly compute, storage, and networking technologies change, anticipating needs years in advance is a daunting challenge. And that’s just the start of the journey. The data center you build may well operate for more than 20 years. Over that time it will go through a huge amount of change, and the planning and optimization you put in place at the start will quickly become unfit for purpose as the facility evolves through multiple iterations. That’s why it’s vital that your facilities stay optimized throughout their lifecycle. Overlook this and you’ll quickly introduce risk and unnecessary cost – factors that have no place in a critical facility.

The most important thing a data center operator must do is meet the needs of the business and its customers. Availability and uptime must be 100%, with services performing as required to deliver a high-quality experience for both customers and internal users. The second most important thing is doing this efficiently, which means using as little energy as possible and operating as cost-effectively as possible. However, you can’t pursue the second at the expense of the first. Unfortunately, given the way many data centers are operated today, that balance is missed all too often. Inefficient data centers introduce more risk and are typically more expensive to operate, largely because their operators lack visibility into what’s actually happening inside them.

Does this inefficiency matter? It sure does! Data centers already consume around 1% of the electricity used worldwide, and that share is rising. Even the most conservative projections have data centers growing at 4.5% annually over the next five years. Some projections show more than 20% annual growth, driven largely by our increasing dependence on online services and the global rollout of 5G. We can’t live without our devices; we use them more every day, and each upgrade demands more bandwidth to deliver the improved performance we expect.
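To see how far apart those projections really are, it helps to compound them over the five-year horizon. The Python sketch below simply applies the standard compound growth formula, (1 + r)^n. The `growth_factor` helper is our own illustration, and it assumes each rate holds constant every year, which real projections rarely do.

```python
def growth_factor(annual_rate: float, years: int) -> float:
    """Cumulative multiplier after compounding a constant annual growth rate."""
    return (1 + annual_rate) ** years


# The two five-year projections quoted above (rates assumed constant each year).
projections = {"conservative": 0.045, "aggressive": 0.20}

for label, rate in projections.items():
    factor = growth_factor(rate, 5)
    print(f"{label}: x{factor:.2f} after 5 years ({(factor - 1):.0%} growth)")
```

Compounded over five years, 4.5% annual growth means roughly 25% more than today, while 20% annual growth means nearly two and a half times as much.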

To support these workloads, cooling takes up around 35% of data center energy, and an estimated 150 billion kilowatt-hours per year are wasted through inefficient cooling and airflow management. For operators, this translates to more than $18 billion wasted every year. These are big numbers, and they are not sustainable from either a cost or a carbon perspective.
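As a rough sanity check, the two waste figures above imply an average electricity price of about $0.12 per kWh. That price is our own back-of-the-envelope derivation rather than part of the original estimate, real tariffs vary widely by region and contract, and the `implied_price_per_kwh` helper is hypothetical.

```python
# Figures quoted in the paragraph above (annual, global estimates).
WASTED_KWH_PER_YEAR = 150e9   # ~150 billion kWh lost to cooling/airflow inefficiency
WASTED_USD_PER_YEAR = 18e9    # ~$18 billion


def implied_price_per_kwh(cost_usd: float, energy_kwh: float) -> float:
    """Average price per kWh implied by pairing a total cost with a total energy figure."""
    if energy_kwh <= 0:
        raise ValueError("energy_kwh must be positive")
    return cost_usd / energy_kwh


price = implied_price_per_kwh(WASTED_USD_PER_YEAR, WASTED_KWH_PER_YEAR)
print(f"Implied average electricity price: ${price:.2f}/kWh")  # prints $0.12/kWh
```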

The good news is that operators who adopt a machine learning and AI-based approach to datacenter optimization have an opportunity to reduce these numbers. Indeed, data centers using EkkoSoft Critical software cut their cooling costs by an average of 30%.
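To put the 30% figure in context, it can be related to total facility energy. This sketch assumes, purely for illustration, that cooling costs scale linearly with cooling energy and that cooling accounts for the 35% share quoted earlier. Under those assumptions, a 30% cooling saving trims roughly 10.5% off the total energy bill. Real facilities will differ, and the `total_energy_saving` helper is hypothetical.

```python
# Figures quoted in this article; the linear cost model is an illustrative assumption.
COOLING_SHARE_OF_ENERGY = 0.35  # cooling's approximate share of data center energy
COOLING_COST_REDUCTION = 0.30   # average cooling cost reduction cited above


def total_energy_saving(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total energy cost saved, assuming cost scales linearly with energy use."""
    return cooling_share * cooling_reduction


saving = total_energy_saving(COOLING_SHARE_OF_ENERGY, COOLING_COST_REDUCTION)
print(f"Approximate reduction in total energy cost: {saving:.1%}")
```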

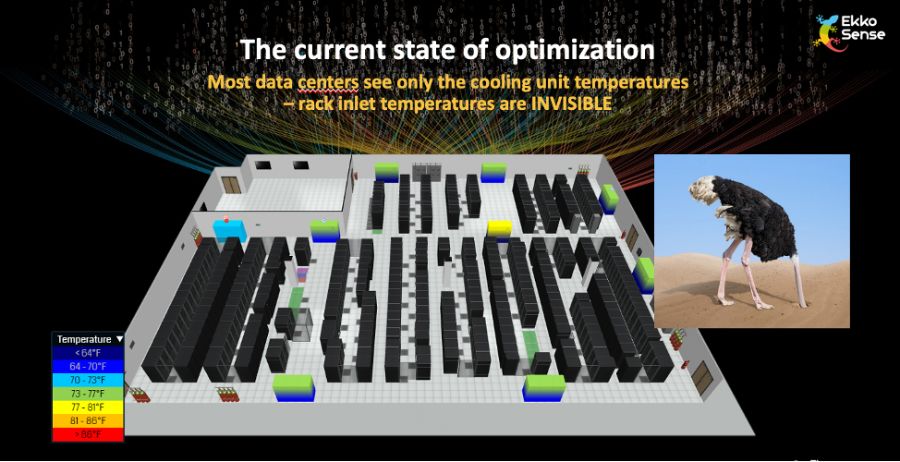

It’s difficult to unlock this kind of performance improvement unless you know exactly what’s happening in your data center. Our data shows that fewer than 5% of data center operators currently measure what’s happening at the rack level in their facilities. As a result, when it comes to the actual IT cabinets, most operators are effectively blind to their data center’s thermal performance and power consumption. They know what temperature their cooling units are running at, but they have almost no visibility of rack inlet temperatures and are simply hoping that every rack is operating within an acceptable range, whether that’s defined by ASHRAE recommendations or another measure.

Given that the hardware in a single server rack can cost anywhere from $40k to $1m, simply hoping each rack is performing well introduces material risk and isn’t a sound long-term strategy. EkkoSense research suggests that 15% of the cabinets in the average data center operate outside ASHRAE standards. That’s a significant proportion, and it introduces an entirely avoidable level of risk into the data center.
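Once rack-level inlet temperatures are being captured, checking them against a threshold is straightforward. The Python sketch below is purely illustrative: the rack IDs and readings are made up, the `RackReading` type and helper functions are hypothetical, and the 18–27 °C limits assume ASHRAE’s recommended inlet envelope, so substitute whatever range your facility uses. It is not a description of how EkkoSense’s software works.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Assumed limits: ASHRAE's recommended inlet temperature envelope (18-27 °C).
# Substitute your own facility's thresholds if you use a different measure.
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


@dataclass
class RackReading:
    """A single inlet temperature reading for one rack (hypothetical data model)."""
    rack_id: str
    inlet_temp_c: float


def out_of_range(readings: Sequence[RackReading],
                 low: float = RECOMMENDED_MIN_C,
                 high: float = RECOMMENDED_MAX_C) -> List[RackReading]:
    """Return the readings whose inlet temperature falls outside [low, high]."""
    return [r for r in readings if not (low <= r.inlet_temp_c <= high)]


def out_of_range_share(readings: Sequence[RackReading],
                       low: float = RECOMMENDED_MIN_C,
                       high: float = RECOMMENDED_MAX_C) -> float:
    """Fraction of racks outside [low, high]; 0.0 when there are no readings."""
    if not readings:
        return 0.0
    return len(out_of_range(readings, low, high)) / len(readings)


# Made-up readings, for illustration only.
readings = [
    RackReading("A01", 22.5),
    RackReading("A02", 28.1),
    RackReading("A03", 24.0),
    RackReading("B01", 17.2),
    RackReading("B02", 26.9),
]

for r in out_of_range(readings):
    print(f"{r.rack_id}: inlet {r.inlet_temp_c:.1f} °C is outside the recommended range")
print(f"Share of racks out of range: {out_of_range_share(readings):.0%}")
```

With these sample readings, two of the five racks (A02 and B01) are flagged, a 40% share. The same check only becomes useful in practice when it runs continuously against readings from every rack.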

In my next post I’ll explain why capturing critical data at a more granular level is an essential first step toward a more effective datacenter optimization approach. You can learn more about EkkoSense’s machine learning and AI strategy here, or register for a demonstration of AI-enabled datacenter optimization in action. If you missed the first article in my series, “How AI and Machine Learning are set to change the game for data center operations”, you can read it here.